AI and the Future of War

"We could easily double, and double again, the researchers working in this space to better understand these issues."

AI safety is having a moment. To discuss why AI safety matters for national security, today I have on Paul Scharre (@Paul_scharre). He’s the Vice President and Director of Studies at CNAS. He previously served in OSD Policy and as a US Army Ranger.

We discuss:

What the future of war looks like as militaries around the world adopt AI technologies;

Why using AI in warfare isn't as easy as people think;

How supply chains can be used as a form of arms control;

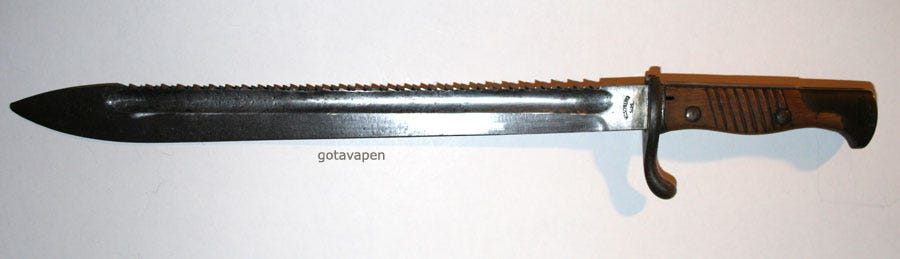

Historical weapons so horrible people simply didn't use them;

How to get a job at CNAS (they're hiring).

This newsletter edition was brought to you today by the Center for a New American Security.

ChinaTalk is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber!

AI-Assisted Warfare

Jordan Schneider: DALL-E, GPT-3, making oil paintings of Mao and Jesus talking to each other… Why does this matter for national security? How is AI impacting the war in Ukraine, for example?

Paul Scharre: Obviously we're seeing tremendous advances in state-of-the-art research labs (especially in language models and multimodal generative models), but there is a big time delay before those advances are implemented in industry. The delay is often even longer in a military context, because there are funding issues for government and bureaucracy gets in the way.

There have been some rumors and reports about image classifiers being used by Russia and Ukraine. I think anyone who follows this technology knows that there's often a pretty big gap between the "hype" about an AI system and what it's actually doing, and I've learned over the years to be skeptical of initial claims. The area where AI is likely having the biggest impact, behind the scenes where it's hard to see, is probably the intelligence support the US military is providing to Ukraine. The US military's first deployment of image classifiers was almost five years ago, through Project Maven in late 2017, so I think it's safe to say that AI is probably being used today in some areas to support intelligence operations, such as analyzing satellite images and drone video feeds.

But I wouldn't want to overstate how significant that is today. When I talk to people on the inside about how the US military is using AI to process intelligence, what I've heard is that a lot of these tools are impressive from a technical standpoint, but transforming operations around the technology, which is what makes any new technology a game changer, is still a way down the road.

Jordan Schneider: Let's fast forward to the Great Taiwan War of 2028. How does AI impact the force structure and the way the conflict plays out?

Paul Scharre: Over the next six years or so, I think we're going to see increasing use of some of the AI tools that are fairly mature today: natural language processing, predictive algorithms for things like predictive maintenance, certainly image classifiers to do object detection and recognition for drone feeds and satellite images, and probably a lot of business process automation. Some of that may not involve machine learning at all, but simply automates tasks that humans are already doing in order to speed them up.

That'll enable militaries to do things like compress targeting cycles, shortening by maybe several hours the time it takes to get a piece of intelligence, understand it, pass it to those making decisions about targeting, and then carry out some kind of attack.

We're a long way from Skynet and AI systems running warfare in a very significant way.

Jordan Schneider: Let’s look at the 15-plus-year horizon. What's the bull case for how impactful these technologies could end up being?

Paul Scharre: I think one of the interesting questions is: what happens when you have AI implemented across the military, the same way that other general-purpose technologies like electricity or computers are integrated today? Over time, say over a fifteen- or twenty-year horizon, I do think it's quite possible that you'll see AI systems being used to analyze information more rapidly, fuse it, pass it to the decision makers who need it, and help them understand the battle space better.

Then, over time, we’ll see more intelligent, networked, autonomous swarms, munitions, and platforms that can carry out operations, whether it's ConOps, operations, logistics, or kinetic strikes against various targets. All of that is likely to lead to a battle space that is more transparent, where it's harder to hide, where militaries have greater situational awareness, and that is faster-paced. The Industrial Revolution produced a whole set of technologies (iron, steel, firepower…) that transformed warfare over time in WWI and WWII and increased its physical scale and destructiveness. AI is likely to do something similar in the cognitive dimension of warfare, increasing the pace and tempo of military operations in ways that, in the long term, could actually be challenging for humans to deal with.

Risks of Military AI Applications

Jordan Schneider: You had a nice sentence recently: “AI systems could fail potentially in unexpected ways due to a variety of causes, including faulty training data, insufficient test, evaluation, verification and validation, improperly designed human machine interfaces, poor operator training, insufficient institutional attention to safety, automation bias, unexpected interactions with the environment and human error, among other causes.”

Paul, pick your favorite three: what are the most important, and least tractable, messes that badly operationalized AI could lead armies into?

Paul Scharre: AI systems are powerful, but brittle. They can be quite effective at performing various tasks, but when they're faced with some kind of novelty they often break badly. They generalize poorly and don't handle out-of-distribution examples very well, [especially] if they’re facing something in the real world that's not consistent with their training data.

This is particularly true in the military context. You don't know where you're going to be deployed or who you're going to fight against; your enemy is adaptive and adversarial; they're not going to put out their military systems for you to train your system on; and they're going to conceal things that they only use in wartime. These conditions are tailor-made to break AI systems. If self-driving cars have trouble in a driving environment where we've mapped every aspect down to the centimeter and people aren't trying to get hit by cars, a war zone is just much, much more challenging.

That doesn't even get into the security vulnerabilities: data poisoning, adversarial examples, and ways to manipulate these systems that obviously also matter a great deal in an adversarial context.

Jordan Schneider: Let’s move on to destabilization. How might these vulnerabilities interact with conflicts?

Paul Scharre: Some Chinese scholars have hypothesized about a singularity in warfare: a point in time in which the pace of combat operations exceeds humans' ability to keep up, and militaries have to effectively turn over control to machines just to remain effective on the battlefield. We've seen this in other industries like stock trading, where humans just can't possibly be involved as machines make trades in milliseconds. Some US scholars like John Allen and Amir Husain have referred to a similar concept called hyperwar, which is the idea that there's some kind of phase transition in the future towards a much more rapid, machine-driven tempo of war.

It's certainly a possibility, but one that would be deeply concerning. As militaries integrate more AI into their operations, we need to think not only about near-term issues like reliability, but also about the long term:

How do we ensure that we're keeping human control over warfare? That we don't lead to a point where humans are too disengaged from what's occurring on the battlefield?

Announcing a new course: A framework making it easier to read and understand Chinese language news.

A previous guest on the ChinaTalk podcast, Andrew Methven, who publishes the excellent Slow Chinese Newsletter, has just launched an online course sharing his system to make reading and understanding Chinese-language news articles easier and quicker.

The course is delivered live in six weekly sessions starting next Tuesday (4 October), in which Andrew shares an overview of the best Chinese language media sites to find good content, the framework he’s developed, and the process he uses to consume Chinese language media more efficiently. It’s aimed at anyone who needs to read Chinese language sources as part of their job, including analysts, journalists, think tankers and researchers focusing on China, or Chinese language graduates and advanced learners who want to accelerate their news reading ability.

Readers of ChinaTalk can register here for the course, claiming 10% off using the discount code “CT” at the checkout. Registration closes this Sunday.

Horrible Weapons: AI and Arms Control

Jordan Schneider: Let’s talk about the Cold War analogy you just alluded to. Arms control: is it even relevant in an AI context?

Paul Scharre: I think absolutely. Not all kinds of arms control involve preventing access to the technology. [The bans] on landmines and cluster munitions, for example, don't try to stop countries from getting access to the underlying technology to build those weapons. Countries simply agree not to build, produce, [or] deploy them. Countries could say, “Look, we're going to use AI in general, but there might be some AI applications we're not going to use.” In the late 19th and early 20th centuries, we saw attempts to do this with a lot of technologies coming out of the Industrial Revolution. To be fair, they had a mixed track record of success, but I do think that's one way to approach it. The other area is computing hardware, where there are in fact much tighter supply chains and choke points in the underlying technology, so it might be possible to actually limit access for certain actors, depending on how semiconductor supply chains evolve over time.

We have a report coming out at CNAS later this fall from myself and my colleague Megan Lamberth, that walks through the history of arms control and applies some lessons for artificial intelligence. One of the important takeaways is that attempts to control weapons date back to antiquity. The Mahābhārata has this line that, when translated, says, “There should be no arrows smeared with poison nor any barbed arrows. These are the weapons of evil people.”

These attempts recur throughout history, again with mixed effect, but there are many cases of humans, even while killing each other, saying that some weapons are beyond the pale. What lessons can we learn from that? One is the importance of clear rules: people need to clearly understand what is acceptable and what's not, they need to be able to comply with those rules, and the perceived horribleness of the weapon needs to outweigh its military value.

I think it's encouraging that even when they’re trying to kill each other, humans have historically sought ways to try to regulate and cooperate to ensure that the violence isn't worse than it needs to be.

AI is changing the command, control, and decision-making process of a system. How do you even know whether your enemy is using AI? How do you know whether a targeting decision was made by a human or not? There were reports of Russians using an autonomous weapon in Ukraine. Even if that were true, how would you prove it? If you don't know, why does it matter? That makes agreements particularly challenging if you can't verify whether the other side is compliant.

Jordan Schneider: Is there a dirty-bomb equivalent when it comes to AI?

Paul Scharre: I don't know about a dirty-bomb equivalent. I do think that we are likely to see increasingly sophisticated use of drones and, over time, automation, autonomy, and even AI features embedded into these drones for attacks. ISIS had a pretty sophisticated drone capability: they were pretty effective in attacking Iraqi forces several years ago. They had a cell that used basically commercially available drone technology. Fast forward five, ten years from now, [and] you're likely to see more autonomous operations; over time, swarming features that involve drones working together; image classifiers used within these systems that make them more intelligent and cooperative; and, for defenders, targets that are harder to counter.

[With] a drone that's just flying via remote control, you could jam the comms link. If it's using GPS, you could jam or spoof the GPS. But if it's relying on visually aided navigation, identifying objects, or swarming with other systems and able to counter their attacks… It’s probably not as consequential as a dirty bomb, but we already see pretty sophisticated use of robotic systems by non-state actors. Houthi rebels in Yemen are using long-range drones to attack Saudi Arabia; they've actually used drone boats, too. I think over time, those are going to have more autonomous and AI features, and be more effective as a result.

Jordan Schneider: What's compute governance, and why does it matter?

Paul Scharre: Hardware is the element of the AI stack where there's the most potential to actually limit access; other elements, like algorithms, are going to be really widely available. Semiconductor supply chains right now are concentrated in a couple of key nodes and choke points that are largely controlled by either the United States or close allies and partners like Taiwan, South Korea, the Netherlands, and Japan. They control a lot of the underlying technology behind the semiconductors and hardware needed for AI.

This geopolitical reality intersects with the fact that in AI, we've seen huge growth in the computing hardware needed to train very large, compute-intensive models. That suggests there is an opportunity to think about governing compute in a way that might help restrict some actors' access to dangerous AI capabilities if needed in the future.

As we've seen this growth in compute-intensive machine learning, the number of actors that can access cutting-edge machine learning has been shrinking over time. Three of the leading AI research labs (OpenAI, DeepMind, and Google Brain) are all backed by major multinationals with very deep pockets. From the standpoint of democratizing the technology and research, that's a problem. From the standpoint of limiting access to it, that trend is beneficial. It doesn't mean those actors are necessarily the ideal ones, but that trend suggests there might be ways to govern the technology.

We're actually launching a new project at CNAS to better understand this, as the technology evolves and governments get really involved in industrial policy for semiconductors. Just in the last few weeks, the United States government passed a major bill providing over $50 billion in subsidies for [the] semiconductor industry, reshoring fabs to the United States and investing in R&D. Quieter, but also very significant, has been an increase in export controls on manufacturing equipment to China.

How do these political realities intersect with the technology and where is AI going? That’s what we want to better understand.

How to Get A Job At CNAS

Jordan Schneider: If you made it to this point in the episode, you may be thinking, “huh, this stuff is kind of cool. Should I spend the next few years of my life on it?” Well, Paul’s hiring for a number of positions around AI safety and compute governance.

Paul Scharre: We're launching a new project on AI safety and stability! We're very grateful to Open Philanthropy for their support in doing so. It’s a center-wide, multi-year initiative with six new positions open at all levels from Project/Research Assistant to Senior Fellow. We're looking for people who are passionate about this issue and have excellent writing and analytical skills. Experience in AI & machine learning is not required: if you don't have that background, we can give you the tools necessary to get ready. We’ll invest in people taking additional training to get deep on the technology before they get underway in the project. We want people who are excited about these opportunities to shape the policy in this space, and to address some of these risks surrounding AI competition.

Jordan Schneider: I've been thinking about this in the China Studies context. In an ideal world, Paul, should there be a hundred folks exploring AI safety at think tanks and thinking about compute governance? Where does one hit diminishing returns for exploring emerging technologies and defense?

Paul Scharre: On the policy side, I'd look to other areas, like nuclear stability or cybersecurity, where there are robust fields of policy practitioners who understand the technology very well and can have technically informed debates around policy. [Those fields have] vibrant intellectual communities of people in academia, government, and think tanks who are tackling the issue from a variety of different dimensions. Five years from now, I would love to see that kind of community get off the ground in the AI policy space and identify the key research questions. I think we're nowhere near the point of diminishing returns.

We could easily double, and double again, the researchers working in this space to better understand these issues.

Jordan Schneider: I think the idea of a community is a really important point. If you have one person on a question, it's not particularly likely that that person is going to have the optimal answer. But having five people all working on the same question with you is additive because you have people to talk to about these things. You have a pretty positive multiplier going from one, to five, to twenty, to fifty even, before you really start hitting diminishing returns.

AGI Risks… Or Not?

Jordan Schneider: Over the summer, I spent some time at an Effective Altruism conference in San Francisco. The amount of artificial general intelligence (AGI)-adjacent content I consumed and the number of people I talked to frankly blew my mind. To what extent do you think the research areas that we talked about speak to that conversation? How might it relate to the Pentagon more broadly?

Paul Scharre: The national security community really doesn't think, or talk, about AGI, transformative AI, or whatever label you want for some hypothetically more powerful AI system in the future. People are much more focused on the implementation of AI technology today.

Policy issues tend to be very path dependent. Look at lethal autonomous weapons: they have been debated since 2014 in a body at the United Nations called the Convention on Certain Conventional Weapons (CCW). Why are they being debated there? That's the body that existed; before that, it was used for debates around blinding lasers. When a new technology came along, this was the forum in which states addressed it. Autonomous weapons are effectively able to piggyback on the cooperation states built around prior technologies.

If you are concerned about the long-term risks of more powerful AI systems, one way to approach that might be to say, “how can we find avenues for states to cooperate today to minimize AI risks?”

If that's not something you're focused on, I don't think it's necessary to be concerned about AGI risk. For what it's worth, I actually don't like the term AGI. I think it's very anthropocentric. AGI is often framed as what happens when we have an AI system with human-level intelligence. Why would we assume that human intelligence is some specialized form of intelligence that machine learning systems are moving towards? I would not be surprised to find that 15 years from now, we have AI systems that are qualitatively much more advanced than what we have now, and that are better than humans in some areas and worse in others.

Book Recommendations

Jordan Schneider: Book recommendations on technology and national security?

Paul Scharre: The first one would be John Keegan's A History of Warfare. It's a fascinating history of the different ways societies have engaged in warfare throughout human history. [He argues that] war is a cultural activity and expression of society. I think it's a great way to think about war: it challenges the common paradigm in US defense circles. There's this phenomenon in the last several decades in the US defense community, where people keep coming up with new labels of warfare: irregular warfare, unconventional warfare, asymmetric warfare, military operations other than war… And it makes you wonder, maybe we need to expand our horizons for what war is? I think Keegan's book is a great way to get a better picture of human behavior throughout war.

Next one I'd suggest would be Wesley Morgan's The Hardest Place, which traces the history of American military involvement in the Pech Valley in Afghanistan. It's a microcosm of how the US has been engaged in the conflict and how US involvement ebbed and flowed over time. It was personal to me: I spent time there early in the war. I think it's helpful for thinking about not just the history and lessons for future conflicts, but also counter-terrorism operations and drone strikes today.

The last one I'd suggest would be Thomas Schelling's Arms and Influence, which is a foundational book for understanding conflict and international relations. He talks in really accessible ways about things like deterrence and compellence. I think there are a lot of important lessons there for what’s going on in Ukraine or Taiwan.

Jordan Schneider: Since you shouted out John Keegan, I’ll throw in The Face of Battle. It is a grunt’s-eye view of three battles: Waterloo, Agincourt, and the Somme. The chapter that really stuck with me was actually the Agincourt one: the image of men literally running into each other, the physicality, and the awfulness of it. It pairs interestingly with a lot of his subsequent work, which goes up the chain of command, as in The Mask of Command.

Keeping in mind that people are fighting and dying, in often really awful ways, seems to be really important to ground folks, especially as you hear a lot of loose words being thrown around in the China context.

Outro music is A.I. 爱 by 王力宏 Wang Leehom, a very much cancelled Taiwanese pop star.