ChatGPT: China's AI Researchers React

"China must have its own large foundational and large application models to say goodbye to 'Silicon Valley Worship'"

What does China’s AI industry think of OpenAI’s ChatGPT? Below are translations of some interviews with Chinese AI entrepreneurs and researchers published by the Chinese business press over the past week.

China Needs an OpenAI

From 钛媒体 TMT Post, a tech and media publication.

“China must have its own large foundational models and large application models. It’s very simple: as long as OpenAI’s key model isn’t open-source and we only have access to the API, China will not be able to use it easily. It’s already a bottleneck, so why aren’t we doing it?” [Tsinghua Computer Science Professor] Huang Minlie told TMT Post that many Chinese teams like Baidu and Lingxin [an AI mental health startup] are committing to invest relevant resources to solve issues [related to artificial intelligence-generated content (AIGC)].

In order to say goodbye to Silicon Valley-worship, China’s internet ecosystem needs to build its own ChatGPT with uniquely Chinese innovative characteristics, and even a Chinese AI firm that exceeds OpenAI in capability. This is an essential question for the development of China’s AI industry.

According to the report “China’s AI Digital Industry Looks to 2021-2025,” released by the Zhongguancun Big Data Industry Alliance, by 2025 the core pillar industry chain of China’s AI digital businesses will reach 185.3 billion yuan, with a compound growth rate of about 57.7% in the next five years. Guotai Junan, on the other hand, predicts that in the next five years, up to 30% of image content may be generated by AI, with a corresponding market size of more than 60 billion.

In China, the concept industry surrounding AIGC is growing steadily, and investors like MiraclePlus, Lenovo Capital, China Growth Capital, and Will Hunting Capital are either watching from the sidelines or paying close attention. This December, digital brand AVAR, which generates 3D content via AI, received angel investment, and has raised three rounds of funds within one year of founding; Kuayue Xingkong (iMean), another brand, has also raised two rounds of funds worth tens of millions of yuan within half a year. Time will tell whether unicorns like Stability AI, which is behind the AI drawing platform Stable Diffusion, will emerge.

[Deputy Director of JD.com’s AI research institute] He Xiaodong says that at the moment, GPT (AIGC) entrepreneurship has two notable features: one is that from a research point of view, China will continue to explore algorithmic innovation on the technology front; the second is the industrial value, especially because the ability to generate text with unique experience and value is close to the point of being commercialized. In the future, we may need to consider specific application scenarios as well as issues like accuracy, especially when it comes to vertical expertise.

“Now is definitely a good moment for developing AI applications, and especially a good moment for applications to land. I’m reasonably optimistic about AI, and I believe the future of AI lies in the path of industrialization. More and more, I feel that there are more opportunities for application in industry than in academia.” He Xiaodong believes that AI technology will gradually move from a “workshop-style” mode of research exploration to delivering “industrial-level” projects or systems. At the moment, He’s team is actively exploring and developing technological products in AI voice interaction, multimodal intelligence, and digital humans.

AI Generation Is the Next Startup Trend

Yicai spoke with a range of investors and experts about what ChatGPT means for the AI market. Wang Yonggang 王咏刚 is the Executive Director of Sinovation Ventures AI Institute.

According to Wang Yonggang, the better ChatGPT gets at imitation and writing, the more this future risk deserves attention and needs to be dealt with in advance. Today's AI generation theory is not yet able to guarantee the logical correctness and rationality of the generated content.

Establishing an AI training process involving human domain experts and developing reinforcement learning algorithms for correctness may be a hotspot for AI research in the future.

The second concern is that AI and computer science professionals are struggling to stay calm. Wang says that in the face of ChatGPT, whose ability to hold multi-round conversations has dramatically improved, AI and computer science practitioners must hold off on worship with more restraint than ever; at the very least, they should honor the spirit of testing and verification, constantly explore the new models’ upper limits, and differentiate between real "memory cognition" of the model when generating answers and an "imitation game" based on feature similarity.

…

In the future, with continuous improvement of deep learning models, the promotion of open-source models, and the possibility of commercializing large models, the development of AI-generated content (AIGC) is expected to accelerate. In November 2022, Wang went to Silicon Valley and visited many investment companies, technology firms, and startup teams. He found that almost everyone in the tech world was talking about AIGC, as if startup ideas without AIGC packaging were not good projects and scientific research without the concept of AIGC could not lead to good papers.

But is that really the case? In subsequent communications with two OpenAI co-founders, Wang found that they would talk about their work plans and technical thinking in a very technical and pragmatic way, but they didn't know what AIGC really meant, which surprised Wang.

After some consideration, Wang says, “The founders of OpenAI, who practically created the field, don't really need to know new terms like AIGC, which are purely used to package techy concepts. What they need to study are the structure of large models, parallel training acceleration, neural network optimization and other specific technologies. Such people are the real creators and navigators.”

At present, [China’s] domestic AIGC world is red-hot. In Wang’s opinion, some of these entrepreneurs and investors, as well as most of those rushing to package AIGC into application products, are actually aimless followers who are not able to influence the general direction of technology. “I hope that followers will not become too hot-headed on the grand stage of AIGC.

“We need more discernment after all: are the so-called ‘products’ you’re building ultimately beneficial to human progress, or are you just pumping more garbage into an already fragmented content ecosystem?”

ChatGPT Inspires Chinese Innovators

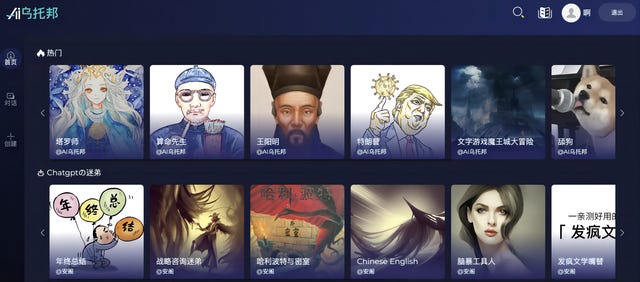

EqualOcean spoke to Professor Huang Minlie 黄民烈, founder of the Tsinghua-incubated Lingxin Intelligence, an AI mental health startup. Its AI Utopia allows users to speak to AI-generated celebrity personas, as well as AI therapists and even fortune tellers. Lingxin’s earlier product Emohaa is an AI chatbot focussed on emotional support.

EqualOcean: What inspiration does OpenAI’s research bring to the AI generation field in China?

Huang Minlie: I’ve summarized it into five points.

“AI 3.0”, represented by companies like OpenAI, is pursuing a path that’s very different from previous AI waves. This new path is steadier and more connected to the real world, and its industrial application will be more straightforward and deliverable. The distance between academic research and industrial application has shrunk, and the move from one to the other has accelerated.

Platform companies like OpenAI, with underlying AI capabilities and user data, are more capable of leading the future of AI. Through the positive-cycle mechanism of "user interactions → data → model iteration → more users", the strong will always be strong.

Valuable research needs to further consider the needs and scenarios of real users. Academia’s continuous pursuit of new benchmarks is a waste of resources. From the perspective of benchmarks, InstructGPT models’ abilities are not very impressive; they may even be a regression. But the real user data is amazing, and it shows that academic benchmarks are very disconnected from real life. They are unsuitable for delivering AI research. As such, industry data that’s more open and widely shared should be a goal we strive for in the future.

The era of seamless AI-human interaction is about to arrive. At the moment, AI’s ability to generate conversations has made it so that conversations are a viable entry point. What we previously called “conversation as a platform/service” is no longer a pipe dream. But I still think that it’s a little too far-fetched to say ChatGPT will replace Google; it’s more of a very beneficial supplement to current search engines.

Academia and industry should strive together in the direction of more helpful, more truthful and more harmless AI research and applications. More helpful, in that the fruits of AI research should solve real-life problems and satisfy users’ real needs. Truthful, in that AI models should be able to generate trustworthy results, know what they know, and know what they don’t know (this is very hard). Harmless, in that models should have values, adhere to societal morals and regulations, and generate safe results free of prejudice.

My AI Assistant is Better!

National Business Daily interviewed Li Di 李笛, the CEO of Xiaoice. Xiaoice is one of China’s top AI firms and spun out of Microsoft’s Xiaoice R&D team. Its “AI Beings” concept seeks to create digital humans and eventually personalized AI assistants. It currently runs the app 小冰岛, an experimental AI social media platform.

Li Di thinks that at the moment, the industry’s attention and excitement over ChatGPT has become a bit overblown.

"Why do humans get excited?" Li Di thinks that when people have a general expectation for something, but find that it far exceeds everyone's expectations after interacting with it, they will be surprised. "However, even if we only consider AI, there have already been so many surprises in recent years; earlier, when GPT3 came out, people were surprised but nothing happened; if we go further back, AlphaGo A won a game of chess against the world’s best player and everyone was surprised, but nothing seemed to have changed after that."

However, Li also mentioned that there are three things about ChatGPT that need to be looked at relatively rationally.

First, ChatGPT has great innovation: that is, it proves that new training methods on top of the original large model can better improve the quality of the conversation. Second, ChatGPT does not constitute a new iteration of a large version, but a fine-tuning of the previous one, which to some extent makes up for some of the defects of a large model with a huge number of parameters. "Even OpenAI defined it as GPT3.5, not GPT4." In addition, Li believes that the breakthrough of ChatGPT is mainly a research breakthrough.

As for whether ChatGPT, as currently imagined by the market in general, will immediately usher in commercialization and disruptive impact, Li thinks it is unlikely. "But that doesn't at all affect the fact that in recent years, especially after the large model idea came out, we are once again seeing a big change in the conversation, and everyone is moving forward on this path."

…

He also talked about the fact that when it comes to companies that mainly do large models, results often depend on the extent of their exclusive focus.

"What makes OpenAI different from other companies is that it is very focused on doing large language models, so it has invested a lot of time and effort. It has a lot of experience, but the accumulation of that experience isn't impossible to replicate."

Untrustworthiness and high cost become obstacles to commercialization

The most conventional format of ChatGPT answers is to first give a conclusion, then make a list of facts, and then deduce a conclusion through its list of facts.

According to Li, it does not really matter to ChatGPT whether the conclusion itself is correct or not. He used an example of a Q&A screenshot circulating online, in which ChatGPT answered the question of "Who should Jia Baoyu marry in Dream of the Red Chamber?” with “Grandmother Jia”. [They were cooler with incest back in the day, but not like this…]

Li further analyzes that according to ChatGPT's answer, it can be inferred that [researchers] emphasized cause-and-effect relationships when writing guiding Q&As for its training, and the large model focussed on learning such relationships. "[With some of the answers,] if you don't go through it particularly carefully, you will feel that it is a seemingly logical cause-and-effect answer, but in fact it is very badly reasoned."

Li believes that Xiaoice cares about the entirety of a conversation as well as what kinds of connections are established between the user and the AI after the conversation. "If someone thinks Xiaoice is quite amusing — not particularly knowledge-centric, but very interesting — and that they are willing to communicate with it and ask it questions when they have time, and especially if the answer is quite good, I will be very pleasantly surprised. That would be a very favorable state for the system."

But Li Di also admits that there are many cases in which Xiaoice generates plausible misinformation or directly takes the conversation elsewhere. For this reason, Xiaoice has a dialogue system setup with high flexibility. Li gives the example that when an AI system encounters questions related to sexual materials, gambling, illicit drugs, or pornography, it has to protect itself. The vast majority of large models, including ChatGPT, answer straightforwardly when they notice that humans are asking questions with ulterior motives: "I don't want to answer this question." That answer, in Xiaoice’s scoring system, would get a very low score.

In Xiaoice’s response strategy, instead of directly indicating to the user that it does not want to answer the question, it introduces a new dialogue. If the human user succeeds in starting a new conversation with it, the risk is automatically neutralized. Xiaoice also pays attention to whether the user engages with the new conversation. If not, the system will start trying to reduce the relevance of the answer. "We'd rather have the user think you're dumb and give up on attacking you or getting you hooked, than have the user think you're smart enough to block them and bolster their resolve to challenge you in a tougher way."

In Li's view, this is the trade-off that AI chatbots need to make, because human-machine interaction is never simply a matter of considering the relevance of answers provided and the completion rate of tasks; one also needs to consider possible directions for the next round of conversation.


Some AI Art

I fed Midjourney Auspicious Cranes, which features a famous inscription from Emperor Huizong.
