Compute and the Future of US-China Relations

“The chips stop going to China — but how do we actually make sure they stop using those cutting-edge systems? How do you want export controls on cloud computing?”

We’re back for more of my conversation with Lennart Heim and Chris Miller on compute.

This time we discuss compute’s relevance to the future of US-China relations. We also touch on:

The feasibility of chip smuggling, in light of the recent chip export controls;

The uneasy alliance between technology and morality;

Pertinent parallels between energy-sector and chip regulations.

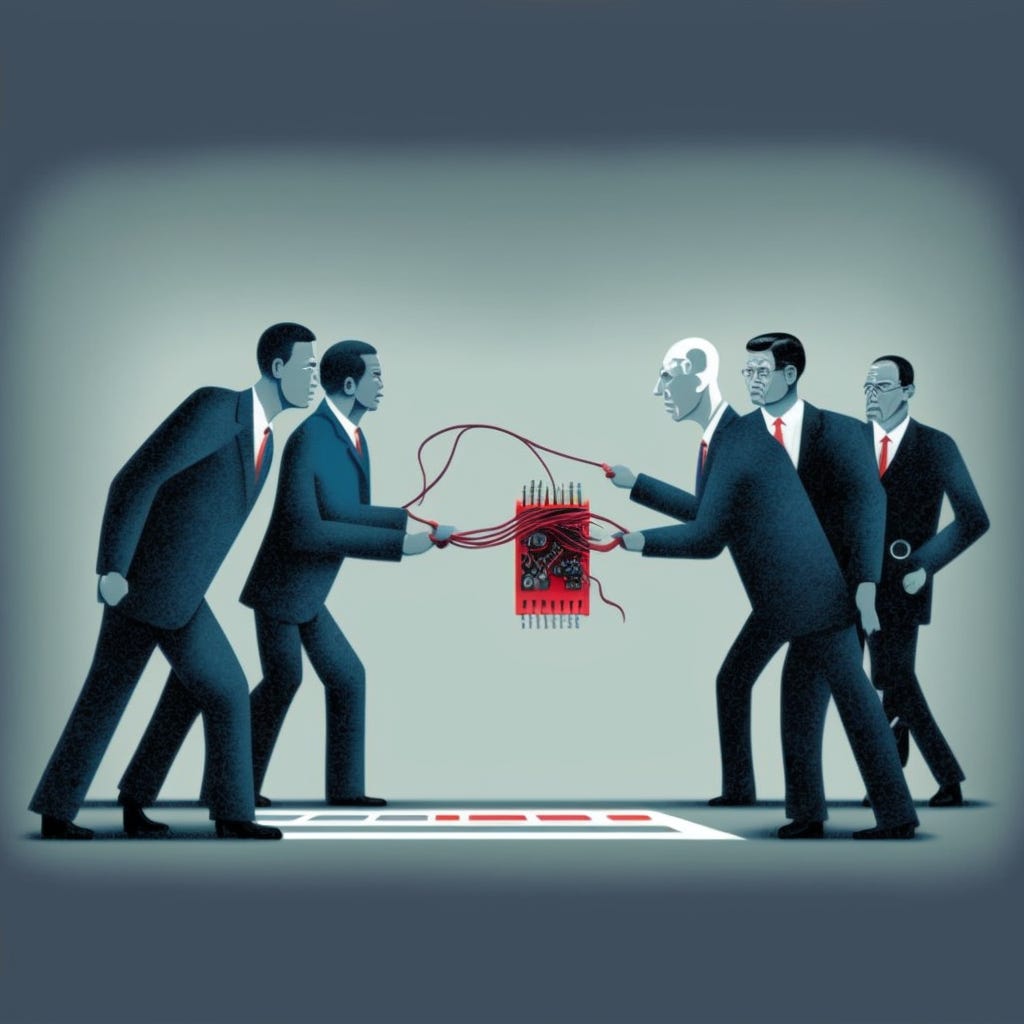

US and China Compete for Compute

Jordan Schneider: So this is where we get into the competitive dynamics. I’m sure there will be researchers out there making the argument that, “Look, this is the new fire — the more you try to shape and contain and have controls and be careful, the easier it is for researchers who don’t face those constraints to progress ahead.”

And particularly when you have this open-closed dynamic, where a more open system will allow more people to work on, iterate, and progress it — maybe harder to do it on the training side, but certainly on the inference side — you could see a universe in which this sort of thing allows nations or researchers inside of nations who are less stressed out about these sorts of things to make up time and capability.

Generally, what is the right way to think about which parts of the triad are easiest and hardest to diffuse?

Lennart Heim: I think that’s a strong argument. We have algorithms, we have data, and we have compute. If you look at data, there are actually open-source datasets. For example, take a dataset that Stable Diffusion was trained on: if it’s not a closed dataset, you guys could be using it right now, as could anybody else in whatever country you want to name.

If we look at algorithms, it’s sometimes the same. There’s a spectrum of how you can publicize algorithms. You can just not talk about them. You can write up a paper where you kind of describe it, but the code is not open source. And the most extreme example is, “Yup, the code is open source: here it is.” This is the case for lots of stuff in machine learning, with all of the benefits that brings: TensorFlow, PyTorch, and the other fundamental building blocks of machine learning systems are out there and open source, so you can use them.

What those two things have in common is they’re digital. So if I know how to hack your computer and you have a secret dataset, I press command C, command V, and here we go: I have it. Can you stop me from doing that? It’s going to be pretty hard, because I just created a copy. It’s a non-rival good: people can use it at the same time. The same goes for algorithms, and the same goes for data.

But there’s a big exception for compute. If you are running an algorithm on your computer right now, I cannot use it. There are just fundamental physical limits — given the amount of compute, there’s a limit on how many algorithms can be run right now in the world.

So this makes compute interesting, because you cannot simply hack it and steal it. There are some ways you can technically hack a data center and then run and train your system there. But it’s something you would notice: it draws energy, your GPUs will be at full utilization, and you won’t be able to run your own system properly.

There’s a neat way of thinking about diffusion: what is getting diffused depends on the publication norms of researchers. If you think about people hacking and stealing those kinds of algorithms — it’s really hard to prevent someone from hacking data and algorithms. Usually you can hack into companies, even the best-secured ones.

So I’m pretty excited about compute as a resource which you cannot digitally steal, because it’s a physical thing. And not to forget: next to those fundamental compute properties, there are all of the properties of the supply chain, of the state of affairs, and of how everything is split up right now.

Jordan Schneider: But you can still steal physical things — it’s just a little bit harder. We’ve talked a lot on ChinaTalk about export controls — and of course, one of the flagship pieces of the Biden administration’s export-control regime is this restriction of the highest-performance compute from being imported into China.

I think we’ve covered pretty in-depth on this show the challenges that China will face in domesticating the manufacturing of those chips. However, there are millions of H-100s sold every year, and diverting some of that supply down into some Guizhou server farm isn’t the hardest thing in the world to do.

So the question, then, is, “Can you contraband your way into having enough compute?” Not necessarily to do things with heavy national-security implications (say, having 2,000 GPUs model a nuclear weapon). But if we end up on a technological timeline where you need to run $500 billion training runs, can gaining access to that compute in a sneaky way be a potential solution for China?

Lennart Heim: That’s a good question. It turns out you need a lot of chips for that. I think it was recently in the news that somebody tried to smuggle some chips into China with a fake baby bump.

Jordan Schneider: But that story was misreported: the Chinese government caught her — and that was someone trying to evade import taxes. That’s very different than a state-run effort to obtain strategic compute.

Lennart Heim: Oh, I agree. I’m just saying it’s an example of how there’s a limit to how many you can put in your baby bump. Even assuming they would just go through, say, the airport in San Francisco, and that the TSA would say, “Okay,” there’s a limit to how many A-100s you can put in your backpack.

Jordan Schneider: But is there? I don’t know. A lot of cocaine comes in and out of every country in the world. I think you can figure this out, especially if you’re a nation-state.

Chris Miller: I think that’s the wrong way to think about it. A thousand chips do not make a data center — nor does 10,000. There’s a lot more that has to happen beyond just getting chips into a country. And I think the interesting part of this is that data centers are big buildings; you can see them from space; and the numbers that we’re talking about are actually quite small. So it’s a much more knowable problem, knowable question as to which chips are ending up in a given building than, say, tracking cocaine flows into the United States — because the cocaine ends up in a million different consumers, whereas the chips end up in maybe a hundred different data centers.

Jordan Schneider: But it can go from narrow to wide to narrow. You can have Intel selling it to a hundred distributors, which then go to 10,000 or 100,000 different places. And even if it ends up in one place, I guess the Air Force could bomb it in a war. If America wants to turn out the lights at these places, they can — as if it were an Iranian centrifuge. But I don’t know if that necessarily solves the issue here, Chris.

Chris Miller: Well, I think in terms of understanding how things are entering China and the networks that are delivering them — the fact that you’ve got a limited number of end customers makes the tracking problem a lot more feasible.

Lennart Heim: Just in general, maybe the big difference with cocaine is that the semiconductor supply chain is really concentrated and really complex. If I wanted to make cocaine (albeit probably not in Switzerland [ed: then again…]), I could probably get it done. But building A-100s? I don’t know if I could get it done by the end of my lifetime. What we eventually see is this: if we look at all the Nvidia GPUs, all the Nvidia A-100s, or Google’s TPUs, they’re all coming out of fabrication plants; they’re coming out of TSMC.

We are using compute right now. This call is running on some computer. I don’t know where it is, but it’s not at our homes; it’s running on a server. So actually, you can be, say, China and train a model on compute just sitting in Arizona via cloud computing.

This is opening up a really interesting question: the chips stop going to China — but how do we actually make sure they stop using those cutting-edge systems? How can we make sure that, in the future, they’re not going to be using AWS’s or Microsoft Azure’s compute? That opens up a new domain: how do you want export controls on cloud computing?

This is an open question to me. How are we going to go about this whole cloud computing thing and make sure that compute does not get misused? If I don’t have A-100s at home, why wouldn’t I just use cloud computing? Why wouldn’t the Chinese government do the same in the future?

Chris Miller: Well, there’s an interesting Chinese government policy decision as well — to what extent do you allow or not allow the transfer of relevant data abroad?

Lennart Heim: Right. I think what we historically have seen is that they mostly use their own data centers for data privacy reasons; and they will continue doing so because, at the moment, they still have the A-100s. But if we look five years into the future, and they don’t have the new Nvidia chips, the H-100, there might be some desire to use our cloud computing technology.

If Moore’s Law continues, there will be a strong desire to use new computing technologies, and then a desire to access them via cloud computing legally. Or maybe you’ll start setting up some offshore entities which are registered wherever.

There are lots of things we should look at — for example, who are AWS’s customers? Maybe they just want to implement a really strong customer scheme. And I think right now they would not be allowed to give cloud computing to companies on the entity list, right? They’re already forbidden there. But how do they enforce it? I don’t know. I guess they need a law.

Chris Miller: And also there are Alibaba cloud facilities outside of China — which, because they’re outside of China, can all legally buy controlled GPUs.

Jordan Schneider: Two points. First: the export controls explicitly do not prevent Chinese firms that aren’t tied to the military-industrial complex in a tight way from using server farms in Singapore. That would be a very tricky secondary sanction to enforce. But it’s still totally fine right now.

Second: we’ve seen in the past few months Nvidia and Jensen Huang basically saying, “Look, our latest chip — we retooled it, and it’s 97% as good, and we’re still going to sell it to China, and we’re not going to lose a lot of sales.”

That’s not going to cut it five years from now — when the latest tech can’t squeeze past the 2022 export controls in a way that satisfies customers and creates globally competitive products. So, if and when that happens, that’s really when the rubber is going to hit the road on this. And Chinese firms are going to be pushed out into wanting to get their compute from outside China for the latest, greatest, most efficient, cheapest-to-run technology.

Rights and Responsibilities of Compute

Lennart Heim: Indeed. I think there’s also a new notion: more compute means more responsibility. The one who’s providing the compute needs to check what it’s being used for. And this is, in my opinion, a really interesting and important development.

We’ve seen over time: people first thought Facebook is just software, just a platform. But the more we moved onto it, well, it turns out Facebook is more than just a platform: you’re actually responsible for what happens to our society because of this.

I think the next move is: well, it turns out you’re providing compute — which can be used for many, many good things and for many, many bad things — so you should bear responsibility for it. And if you think deeply about this, cloud providers are probably next.

One example is when AWS decided to shut down Parler. Amazon decided, “Actually, we are not doing this. This is not our type of platform. We’re shutting it down.”

I’m pretty excited about this general notion where compute means responsibility. This is the world we should live in right now. You should bear responsibility.

Chris Miller: What are the other examples we have — besides Parler — of that happening? Does AWS have a content advisory board or something that tells them?

Lennart Heim: There’s also the example of Silk Road.

I’m in Switzerland; they have a bunch of data centers here in bunkers, and they maintain lots of privacy. Well, it turns out that they’re running lots of Tor, dark web, and illegal drug platforms.

If you think about illegal websites, AWS and Microsoft Azure aren’t hosting them — they’re checking on them to see if you violate their platforms’ policies. Drug markets are one example where people are not excited about them and don’t want to affiliate with them. “But technically I’m just giving them FLOPs [floating-point operations] and some gigabytes, right?” But it turns out that those FLOPs and gigabytes will run some shady platform eventually.

Stable Diffusion, in the future, might be misused for creating nudes or deep fakes. We’ve seen images of people being misused to create revenge porn. I would expect that AWS and others are not excited about hosting these, and I think they should not be hosting these. So those people should not be allowed to deploy those models.

That’s an example of where somebody uses machine learning models, needs to deploy them on a web server to run them at scale and efficiently — but it’s the usage which you’re not excited about.

Right now, we use Stable Diffusion to create lots of nudes and deep fake videos. But maybe there’ll be an example in the future where AWS or Microsoft Azure would say, “Nope, this is against our content policy” — which I would encourage them to do.

Jordan Schneider: It’s interesting because, the lower down the stack you go, the less folks have been stressed out about these sorts of things. Compute has been so far down that Nvidia has not thought about this sort of thing at all.

Meanwhile, the higher up you go and the closer you get to interfacing with people, the more that content policy comes into play. So for the recent history of the internet, this has been the job of consumer-facing platforms like Facebook, Google, and Twitter to draw the line. And going down to the Cloudflares and AWSs of the world, they have maintained for a long time, “Look — this is basically the problem of everyone else, and we’re not going to stress out about it.”

Though maybe the Trump era was a bit of a turning point for this — those down the stack started to draw circles smaller than what a Facebook or Twitter would draw. But I remember Cloudflare at one point was like, “We’re going to stop hosting neo-Nazi, KKK stuff.” Okay — but once you do that, there are lots of other bad things, and you don’t rate things on a scale of bad.

So I don’t know where Nvidia is going to draw the line. Is it going to draw the line at nuclear-bomb modeling, just WMDs they’re not cool with? Frankly I’d be surprised if they figured out a way to stop people, from a chip perspective, from doing revenge porn.

Next we discuss:

Lennart’s take on why cloud providers are ultimately the only entities which can assume responsibility for how their technology is used;

What AI policymakers can learn from regulatory models applied previously in other domains, like nuclear energy;

How sublinear scaling will challenge Moore’s Law in the near future.