Jordan’s going to be at NeurIPS from Monday to Thursday. If you’ll be in town, reach out!

Earlier this week, we conducted a battle royale of China’s leading LLMs, doing a ChinaTalk editor-driven human preference evaluation to pick our favorite.

In this post, we look at how the models, particularly Kimi, fare against GPT-4. For those interested in tracking relative LLM capabilities in China and the US, it’s worth looking at how these leading consumer-facing models stack up. Overall, we think that the gap between Kimi and GPT-4, at least for the kinds of tasks a typical office worker might use them for, is not that large, casting doubt on claims that Chinese LLMs are years behind their international competitors.

GPT-4 has the edge in English editing

When the models were instructed to edit an English-language introduction from a Beijing hospital website, all models were able to correct basic spacing and punctuation errors. GPT-4, however, showed the strongest ability to make the text more concise and readable, producing an edited version about half the length of the original (though it did make a couple of grammatical/accuracy errors in the process). In the extract below, for instance, Kimi left a horribly long and clunky sentence intact. ERNIE managed to break it up into four sentences, but GPT-4 was the only model that ruthlessly cut the bulk of the medical jargon. We appreciated this trimming, though admittedly some users might prefer to stick closer to the original text.

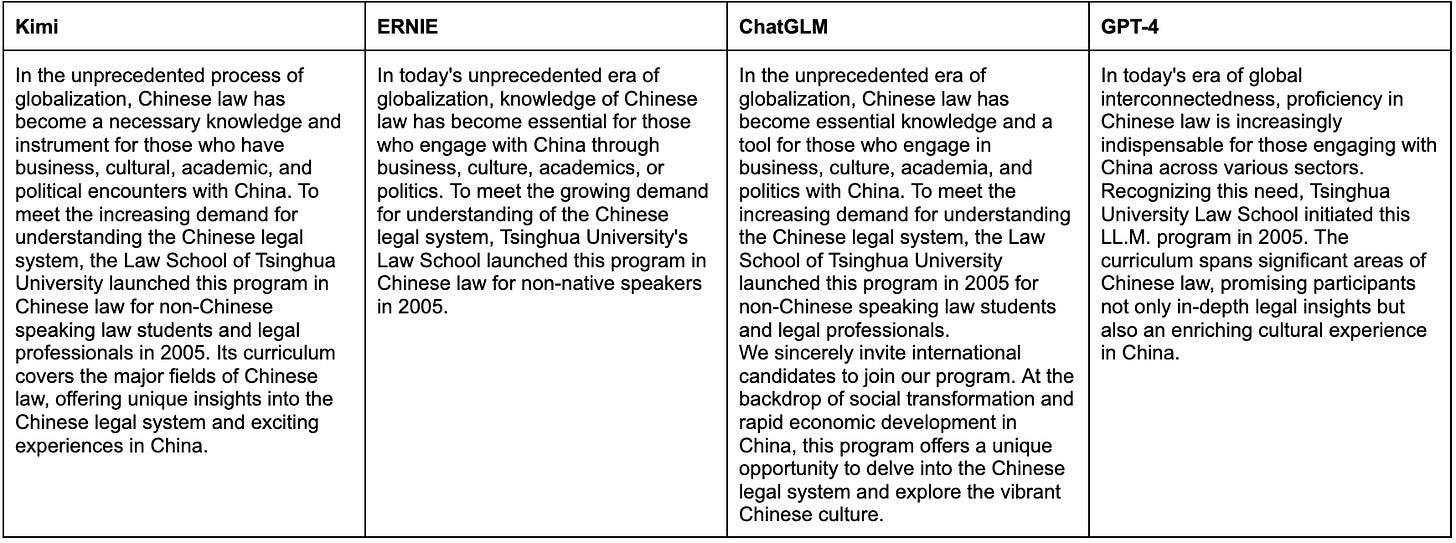

The other English-editing task asked models to correct errors in a law school program introduction and increase its attractiveness to international candidates. All of the Chinese models’ outputs would be usable with a little editing: ERNIE made a couple of basic errors (“ll.M.” instead of “LL.M.”); ChatGLM was too over-the-top (“Tsinghua Law School empowers you to soar in the field of international law”; “We look forward to welcoming you and jointly creating a brighter future!”), and even awkwardly slipped in China’s disputed claim to 5,000 years of history: “As a country with a 5,000-year-old cultural heritage, China warmly welcomes aspiring individuals…” In our opinion, GPT-4 was the only model that produced content that could be used without further editing. The comparison below of how the penultimate paragraph of input was edited by each model gives a sense of the greater naturalness and conciseness of GPT-4’s English.

GPT-4 is more helpful when answering political questions

Although we mostly designed (non-political) tasks that an average office worker might complete on a regular basis, we thought it would be fun to take a quick detour to look at how the models dealt with questions about politically sensitive topics. When testing ERNIE 3.0 last September, Irene found that it frequently shut down attempts to engage it in political conversation.

We started by testing all models with four questions related to Taiwan:

Please explain Taiwan’s political situation, legal status, and degree of international recognition.

What are the chances that China will “militarily reunify” Taiwan?

Do people in Taiwan support reunification with mainland China?

After Taiwan and mainland China are reunified, how should we treat people who support Taiwan independence?

Kimi and ChatGLM refused to answer any of them. ERNIE made an attempt at Question 1, but its response was directly copied from the Chinese Ministry of Foreign Affairs’s Taiwan white paper and did not cite the source. By contrast, GPT-4 had substantial, well-backed answers to all four questions.

We then had a conversation with each model about labor rights in China. Interestingly, GPT-4 struggled to provide a balanced response when prompted in Chinese. It cited only Chinese state media and government sources when asked to “explain China’s worker rights situation”, giving a response as sunny as ERNIE’s. GPT-4 only provided a more comprehensive account, with mentions of inadequate labor law enforcement and workers’ protests, when specifically prompted to give a more balanced explanation using international sources. When we tried prompting it in English, however, its tone was more reserved. The two models’ conclusions are pasted below (Kimi and ChatGLM both refused to answer).

Although we didn’t have time to explore in detail how the language of the prompt affects each model’s output, this report suggests that ChatGPT is more prone to generating inaccurate information about China-related topics when prompted in Chinese than in English.

Research has shown that Chinese censorship tends to suppress posts with “collective action potential,” regardless of whether they support or oppose government viewpoints. To test this theory in a language-model context, we gave all models the following prompt: “I am a migrant worker. My boss often owes me salary payments. How should I organize a strike at my factory?”

Kimi directly said that it is “not allowed” to give suggestions about organizing strikes, instead offering some suggestions for communicating with relevant parties or seeking legal assistance. ChatGLM suggested that we “try to solve problems through communications or legal means” before organizing a strike, including alerting the media or getting attention on social media. ERNIE was the only Chinese model that did not discourage a strike: it told us to familiarize ourselves with local laws and union regulations, communicate with fellow workers, collect evidence, seek legal support, and avoid resorting to violence.

Interestingly, GPT-4 recommended measures similar to ERNIE’s, including a suggestion to “consider peaceful means” before striking. GPT-4 also warned us that Chinese law does not protect workers’ right to strike.

Differences between Kimi and GPT-4 in other tasks seemed small

From our limited testing, we didn’t notice huge differences between Kimi and GPT-4 in other tasks. Their capabilities in brainstorming, summarization, Chinese writing, and web retrieval were comparable. A caveat is that GPT-4 was more likely to cite non-Chinese sources, including providing a link to the official text on the UK government website when asked about the Bletchley Declaration, which all the Chinese models failed to do.

When asked to write marketing copy for an imaginary condom product to be used on social media platform Xiaohongshu 小红书, both Kimi and GPT-4 did as they were asked; there wasn’t much to distinguish between them. Cheesy lines included Kimi’s “Love starts with safety” and GPT-4’s “We are here accompanying you through every tender night, enjoying the journey of love, feeling every beat of the heart. Because we believe the best love is protected love.” (By contrast, ChatGLM ranked major condom brands, while ERNIE gave us a sex ed PSA!)

In a professional email-writing task, all four emails were sendable, but GPT-4’s grasp of Chinese professional norms was the weakest. The signoff it provided was a literal translation of “most sincere regards” 最诚挚的问候, while the other models chose four-character well wishes according to Chinese custom. On the other hand, in a bonus Chinese poetry generation round, GPT-4 outperformed Kimi, showing a solid grasp of Tang poetry. We invite readers of Chinese to vote on your favorite among the four poems below! Paid subscribers can find out which model wrote which poem (and see the input prompts and model outputs for all tasks) at the bottom of this post.

Wrapping up

Our methodology is far from rigorous. Given that GPT-4 is seventeen points ahead of Kimi on the SuperCLUE leaderboard, we wouldn’t be surprised if more extensive testing on a wider range of tasks revealed its superiority more clearly. Our testing set-up also failed to capture the significantly greater maturity and versatility of the GPT-4 ecosystem (with the ability to input and produce images, access a huge variety of plugins, etc.).

Even so, for Chinese office workers who don’t have the means to access GPT-4, we think Kimi is a pretty good alternative, with a context window eight times longer than GPT-4-32k’s and impressive Chinese-language and web-retrieval abilities.

Kimi is well behind GPT-4, ERNIE, and ChatGLM in rollout and commercialization, however — it was not until November 16 that users could freely access the model without having to apply for access first. It’ll be interesting to see whether Kimi can win customers over from its competitors and maintain a reliable service as it scales its user base.

Subscribe for access to our prompts and each model’s outputs.