Announcing: the ChinaTalk book club! We have upcoming shows with the authors of To the Success of Our Hopeless Cause: The Many Lives of the Soviet Dissident Movement, To Run the World: The Kremlin’s Cold War Bid for Global Power, and Learning by Doing: The Real Connection between Innovation, Wages, and Wealth. We’d like to encourage you to read along with us in preparation for the shows!

Manus, a Wuhan-developed AI agent, went viral this weekend. Guests Rohit Krishnan of Strange Loop Canon, Shawn Wang of Latent Space, and Dean Ball of Mercatus and Hyperdimensional join to discuss.

We get into:

What Manus is and isn’t,

How China’s product-focused approach to AI compares with innovation strategies in the West,

How regional regulatory environments shape innovation globally,

Why big AI labs struggle to build compelling consumer products,

Challenges for mass adoption of AI agents, including political economy, liability concerns, and consumer trust issues.

Listen now on iTunes, Spotify, YouTube, or your favorite podcast app.

What is Manus?

Jordan Schneider: This past Friday, Monica — a startup founded in 2022 — launched a product called Manus. The launch was done through a video in English. Manus is ostensibly an AI agent that you tell to do something, and it can interact with the internet as if it were a person clicking around to book a restaurant, change a reservation, or potentially one day take over the world through Chrome. The rollout was remarkable, with hype building dramatically over the weekend. The product seems to be more competitive than similar offerings we’ve seen from OpenAI and Anthropic. With that context, Shawn, what were your first impressions of what Manus was able to build?

Shawn Wang: My first impression was that it’s a very well-executed OpenAI Operator competitor. It can effectively browse pages and execute commands for you. In side-by-side tests that people in the Latent Space community ran with Operator versus Manus, Manus consistently came out on top. This is backed by its results on Facebook’s GAIA benchmark, a public benchmark that evaluates agents on real-world tasks. The product is very promising. I’m somewhat suspicious of how well the launch was executed, with influencer-only invite codes and people writing breathless threads. We’ve seen this many times in the agent world, but this is one that people actually seem able to use, which is nice.

Rohit Krishnan: What interested me most was that all our previous conversations about China focused on models — how good their models are, how much money they have, how many GPUs they possess, etc. Now we’re talking about a product. The closest thing to a product from DeepSeek was their API, which is really good with an exceptional model, but the interface was just the same old chat interface. We’ve been discussing agents extensively for a long period. In the West, we still live under the umbrella of fear regarding AI agents, which is why most models aren’t given proper internet search capabilities. It’s amazing to see that the first really strong competitor has come out of China — arguably better, perhaps slightly worse, but definitely comparable to Operator. They made it work with a combination of Western and Chinese models, using Claude and fine-tunes of Qwen underneath. That changes the product landscape as far as I can see.

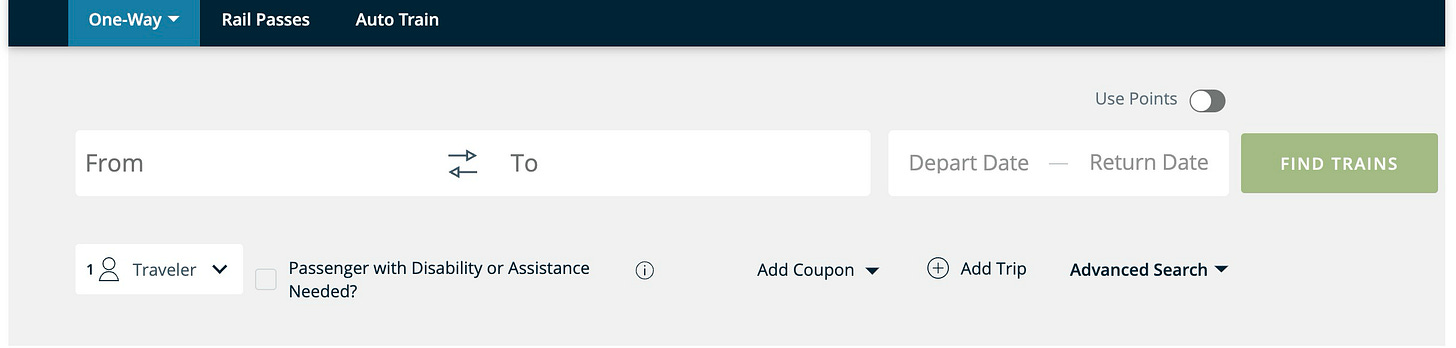

Dean Ball: I didn’t get an invite code myself but was able to use someone else’s account briefly. I ran it through my favorite computer use benchmark that I’ve organically discovered — trying to book a train ticket on amtrak.com. Operator consistently fails at this task, but Manus succeeded on its first attempt. That says something significant! Many other demos I’ve seen of the product seemed quite impressive and like things that would surprise me if I saw Operator doing them, based on my perception of Operator’s reliability and competence.

This isn’t a story about some shocking technological innovation or about DeepSeek’s unfathomable geniuses, as Jack Clark says, discovering some new truth about deep learning. This is good product execution in a style relatable to Y Combinator circa 2015. It’s a well-built product that works effectively, though it has flaws and glitches like all computer use agents do. What’s interesting is that this represents an advancement of capabilities. DeepSeek’s R1 and V3 might be more impressive technical achievements in some fundamental sense, but R1 wasn’t better than OpenAI o1; at best, it was comparable. Manus appears better than what I’ve seen from Operator: at minimum comparable, and I think unambiguously better. Thinking about why that’s the case is a really interesting question.

Jordan Schneider: Shawn, you just hosted a wonderful week-long AI agent conference in New York City. What’s your take on why no one in the West got to this first?

Shawn Wang: There are many skeptics of agents, even among the agent builders themselves. People range across the spectrum in terms of the levels of autonomy they’re trying to create. The general consensus is that lower levels of autonomy are more successful. Cursor, which is an agent, is now worth $10 billion, whereas the people who worked on BabyAGI and Auto-GPT are no longer working on those projects.

Working on level four or five autonomy agents hasn’t been a good idea, while level one and two agents, the more “lane assist” type of autonomy, have been the better play. With the rise of reasoning models and improvements in Claude and other systems, that is changing every month. The first one to get there, like Manus, would reap the appropriate rewards.

Dean Ball: That intuition you’re describing is definitely something I’ve heard too, and it’s probably right. For a variety of reasons, I won’t be using Manus on a day-to-day basis. Part of that involves security concerns, but even without those concerns, I’m not sure this is a product I would use regularly compared to an agent like OpenAI Deep Research or a Cursor-style product. Those have much more genuine day-to-day utility.

If I were an investor in this company, I would be concerned that Manus will be, to use Sam Altman’s terminology, steamrolled by the next generation of computer use agents from the big labs. That’s very possible. From a practical business and technological perspective, this makes sense to me.

Rohit Krishnan: The key question I keep pondering is why Manus wasn’t built by a YC company six months ago. We’ve internalized the fear Dean talked about — that anything we build will get steamrolled by Sam Altman. In some ways, that’s correct. We all personally know code assist companies that emerged a couple of years ago and went bankrupt when the big labs effectively took over.

However, I have this heretical notion that despite everyone talking about agents, nobody at the large labs cares enough about them. They don’t seem interested in building products beyond making models smarter and letting them figure out products on their own. We’re stuck in this weird situation where I have access to every large model in the world, but half of them can’t do half the things because nobody has prioritized those capabilities.

OpenAI’s o1 pro can’t take in documents. o3-mini couldn’t take in Python files or CSVs. Claude can’t search the web. These weird restrictions exist partly because of AI safety concerns and partly because nobody has bothered to add these features.

One significant benefit of something like Manus is that people are actually trying to build useful agents for real-life tasks, like booking Amtrak tickets — which is a great evaluation benchmark. This pushes the labs or anyone else to say, “We should probably try to do this.” We can’t just throw up our hands and wait a year hoping the labs will build the next big thing.

The Western success story is effectively Perplexity: the one company that did what the labs would have been closest to doing but never did, and found success. Beyond that, when thinking about other agents we normally use, I can only realistically name Code Interpreter from a couple of years ago and Claude Code, which was just released. Both are stripped-down versions that do a few things but still can’t handle basics like search.

When I look at Manus, what stands out isn’t just that they made an agent ecosystem work using external or combined models, which everyone expected would happen. More importantly, they actually went for it. There’s a price to pay — you have to try it. You can give it browser access, let it work for four hours, and get something useful back. Unlocking this capability is important from a product perspective.

Jordan Schneider: I’ll give one more perspective as well, which could be a fun US-centric observation. In the US, we’re very interested in B2B and developer tooling, especially in Silicon Valley. We really love developer tools, building for developers because we feel the pain. In China, there’s perhaps more B2C focus, which actually works to their benefit in terms of finding good use cases.

Rohit Krishnan: What is the previous large software success story from China that took over the world? There’s TikTok, and that’s essentially it. WeChat is amazing, but nobody uses it outside China, except maybe in parts of Southeast Asia. Banking software emerged, but nobody really adopted it. Alibaba’s marketplaces exist, but they haven’t permeated the West in any meaningful sense.

This might be an unconventional statement, but AI is one of those domains where you can build amazing AI agents using existing models from anywhere in the world. I’m glad we’re starting to see that happening.

Jordan Schneider: It was remarkable how this company, with both its browser and its first product Monica — a ChatGPT-like search browser add-on — targeted foreign users first. That’s notable because running Claude is illegal in China, which makes development difficult.

Reading interviews with the CEO over the weekend, he stated essentially: We’re not really trying to take on the big labs, but we think there’s an opportunity and a big market here. It was somewhat sad reading when he alluded to the politics of AI: “I've come to understand that many things are beyond your control. You should focus on doing well with the things you can control. There are truly too many things beyond our control, like geopolitics. You simply can't control it—you can only treat it as an input, but you can't control it.”

Frankly, I don’t think Chinese AI agents will have much longevity in the US market without hitting some severe regulatory headwinds. However, their skill at playing the global influencer marketing game to generate this hype cycle reflects a real fluency in digital marketing. The fact that they could play this game better than any Western agent competitor — except for Devin, which tried but faced its own challenges — is remarkable. There hasn’t been another major attempt at this over the past year and a half.

Dean Ball: I would go even further. When the first Devin demos appeared, people exclaimed, “Look how cool this thing is!” Then the bubble burst when people realized it was GPT-4 with prompt engineering and scaffolding.

The Western AGI obsession makes us want to conceptualize this as one godlike model that can do everything, and we implicitly dismiss product engineering and practical applications. You see that reflected in public policy, which is obsessed with big models, giant data centers, and similar infrastructure. Those are the only things we seem to take seriously and value.

I’m a deep learning optimist — I’m not going to tell you AGI doesn’t exist or is a Gary Marcus fiction. I’m not in that camp at all. But the AGI obsession has developed into something that feels like a perversion, distracting us from opportunities lying right in front of us.

I’m not necessarily saying Manus represents that opportunity, but there are thousands of possibilities where cleverly stringing together different AI products and modalities could yield interesting results. We just don’t see much of that happening. A year ago, I was more inclined to say, “Well, it takes time,” but a year later, I find myself less willing to make that excuse.

Structural Factors Driving the AI Product Overhang: Why Big Labs Don’t Do Product

Rohit Krishnan: Shawn, what is this? Are the VCs dumb? Are the founders dumb? Are there actually not pennies to be picked up off the ground?

Shawn Wang: There are, and the VCs have woken up to it. I started writing about the rise of the AI engineer two years ago, and now there are VPs of AI engineering at Bloomberg, BlackRock, and Morgan Stanley — they just spoke at my conference last week.

People were very dismissive of the GPT wrapper, viewing it as just a thin layer over the LLM. Now the perception has almost flipped: the model is the commodity, and everything on top of it is the main value and moat of the product. This is why I started talking about AI engineering, and I think it’ll be a growing job title. It’s what I orient my conference and podcast around.

It’s music to my ears. I’ve been saying this for a while now, and the VCs have caught up. It’s just harder to fund because you can’t just say, “Here’s the pedigree of the 10 researchers I have. Give us $300 million.” Now you have to actually look at the apps and see if they’re well-engineered and fit the problem they’re trying to solve, whether B2C or B2B. That’s much more difficult than throwing money and GPUs at talented researchers and letting them go for it. That approach caused Inflection AI, Stability AI, and other mid-tier startups to burn around $100 million each.

Rohit Krishnan: That’s back to the SaaS era in some sense. You suddenly find a new vertical niche where you can build something, spend time and effort, learn about the specific problem you’re solving — not just intelligence but something more targeted, B2C or B2B. Then you have to tackle it and solve it.

Shawn Wang: The interesting thing about this SaaS transition is you’re charging on value and not on cost, and the margins between those approaches are enormous. Many of us in Silicon Valley realize that if you develop your own models, the next one that comes out is probably open source from China and better than yours. So where’s the value in that?

Everything’s being competed down to cost. Anthropic offers Claude at cost. OpenAI has a small margin, but every other GPU provider serving open source models is just providing at cost because they’re trying to capture market share with VC money. Nobody’s making a margin here.

Contrast a $200 a month subscription with the $2,000 or $20,000 a month agents you could potentially offer, because you’re competing against human labor and human thinking time, which we are all limited by. The economics start to really work out. You could start charging for your output instead of charging for your cost of goods sold. That is fundamentally a better business.

Rohit Krishnan: Speak for yourself, Shawn.

Shawn Wang: The fact that you could just start charging for your output instead of charging for your cost of goods sold is fundamentally a better business.

Rohit Krishnan: You wrote a very cool thing about Google’s awkward struggles to make products that people use. What is stopping the big model makers from starting to do things they can charge value-based pricing for? Is it just that they don’t need to and have their hands full making AGI?

It does seem that just selling tokens isn’t going to make you money in the long run. It’s funny because if you’re one of the large labs, if you’re Sam or Dario, you don’t particularly care about that since you already have so much money coming your way. Anthropic was just valued at around $60 billion, and OpenAI is valued at $300 billion. These are astronomical figures.

We’ve normalized these numbers in conversation, but they’re absurd by any stretch of imagination. $300 billion is bigger than Salesforce. It’s insane to think about for a company. Why are they getting that money? Because they want to build AGI. Why do they think they can build AGI? Partly because they’re true believers, partly because they have the best research talent in the world who wants to build AGI.

What happens if you tell that research talent that they’ll be working on building agents for a while? Many of them would quit. Arguably, many did. In a weird way, it’s only in larger places like Google where you can potentially have a large enough contingent of people try some unusual approaches and build cool stuff.

They did create interesting products — NotebookLM is actually really interesting. It was a cool new product, new modality, new way of interacting with information. I am surprised that we didn’t see more of it. In typical Google fashion, it just kind of disappeared after a while. They have Colab, which is an interesting product that’s languishing in a corner somewhere.

Everything Google does involves creating a very interesting first product and then slowly killing it by cutting off the oxygen supply over the next five years. For somebody to care deeply about building a product here, it has to start right at the top. It has to come from a mission, because the argument against building a product, the engineers saying, “Just wait a year and everything will get solved,” is really seductive.

Safety, Liability, and Regulation

Shawn Wang: You really need somebody who has a Jobsian level of ability to push back and say, “I don’t care what you guys think. We need to actually build something that really works here.” That’s not a muscle that any of these companies have, because none of them have built products. Arguably, the thing that kicked it all off, ChatGPT, was built as a research preview. What we are all doing is being okay with playing around with research previews that keep dipping a toe in and pretending to be a bit of a product, but they’re not really.

Jordan Schneider: Let’s fast-forward to the near future when agents can do more economically useful things than book you a train ticket. Should we start with the safety angle? It’s wild if I’m going to let something exist as me on the internet or in my workplace and I’m responsible for it. Maybe Manus is responsible? Maybe OpenAI’s responsible? Maybe the AI engineer who goes to Shawn’s conferences is responsible?

This is a very weird world where it’s not just Jordan Schneider as an AI-enhanced worker using chatbots, but Jordan Schneider letting go a little bit and having these automated minions exist under my aegis but also not.

Dean Ball: I haven’t checked their website thoroughly, but I would be very surprised if Manus or the company that built it has a safety and security framework, a responsible scaling policy, or has commented on the EU code of practice.

Rohit Krishnan: I actually looked for this. I could not find one thing that the CEO has said in any relation to any safety discussion or question.

Dean Ball: This thing doesn’t have any guardrails. I don’t think it’s a consideration for them. In some sense, that’s probably part of what makes this better than Operator or Claude computer use, because Anthropic and OpenAI have both legitimate business incentives and internal stakeholders who won’t let the company ship things with no guardrails.

There’s reputational risk. If OpenAI had released something like Operator with zero guardrails, you’d be looking at state attorneys general investigating you, and the FTC and others coming after you, just as they did with ChatGPT. The tech industry is pretty risk-averse on things like this because it’s an inherently risky endeavor.

Those are market incentives, because you shouldn’t be incentivized as a consumer or business user to throw agents into the wild who do things for you and potentially cause problems. There should be some liability for that. You should be incentivized not to do such things, and companies should be incentivized not to release such things.

I’ve been thinking about liability issues in the last few months and have concluded that the court system is going to really struggle. If something happened with Manus, there’s the user who prompted it, multiple LLMs behind the scenes, and a Chinese company that is almost certainly not subject to a legally cognizable claim, unless you want to go to court in Beijing. How is the American tort liability system going to figure this out? I’m skeptical it will do a very good job.

But having no liability is a moral failing, too. As the cost of cognitive labor declines, among the only things left with economic value are trust, the pricing of risk, and similar concepts. I wonder if frontier AI companies will slowly converge to being more like insurance companies or financial services companies. Those industries are based on trust, pricing of risk, and allocation of responsibility for harm that occurs from realized risks. That feels like what’s economically valuable here, certainly not selling marginal tokens.

Shawn Wang: There’s one proof point that maybe supports this on some level: we’ll never get the o3 API because OpenAI is choosing to release products instead of APIs. That makes sense if you believe your APIs are valuable: you stop giving them to everyone else. It also stops the Manuses of the world in their tracks, because they can no longer use those APIs.

In broadening this general safety discussion, this is just an argument for American AI accelerationism. The simple fact is, if you are more safe and stop yourself from doing anything, then China will do it first, and you’re behind. It’s better to be ahead and in control of the narrative, build in the safeguards at the LLM layer with the post-training that you do, and try to lead from the front instead of the back.

Rohit Krishnan: I have a more contrarian view. Even framing this in terms of safety is incorrect. What are we talking about today? The Manus of today, Operator of today — these aren’t safety concerns. They’re engineering concerns, misuse concerns. We’re using the 2023 version of AI safety, which seeps into every part of the “anything a model can do can be unsafe” conversation, and that distorts how we discuss what these products do.

As Dean said, the liability issue is important once these tools start getting used inside companies. If someone at Pfizer uses Manus to figure something out and creates a wrong drug, there are liability issues. If someone at Cloudflare uses Manus to fix a bug and creates an outage, there are clear questions about where responsibility sits.

But we’re still at the point of making these things work properly in the first place. Think about our example — testing if it can book an Amtrak ticket. We’re not yet at the point where AI agents are so incredibly amazing that we have to restrict them before they engage. I’d like to see them work properly before we leash them.

That doesn’t mean we shouldn’t have a parallel track thinking about liability issues. But these will be hard-won battles that push the frontier forward one issue at a time, rather than “We’ve figured it out for everything from searching medical information to booking tickets to writing open source code or malware.”

One problematic outcome of these discussions in recent years is that we’ve conflated all these issues into one, and they’re not the same. I look at Manus and think, “Good. I’m glad somebody without a responsible scaling policy is showing us what can be done,” because there’s no inherent problem with giving something a browser. Yes, there can be prompt injection attacks — that’s new and we need to solve it, but we can’t figure it out without anybody actually doing anything. It’s a chicken and egg issue.

Dean Ball: If you’re a dentist trying to use AI to automate business processes within your dental practice, then the fact that OpenAI has a responsible scaling policy about biological weapons risk evaluation isn’t that important for you. But perhaps more problematically, OpenAI’s model specification says, “Follow the law.” Okay, gotcha.

My view is that we have to almost entirely reject the tort liability system for this because it’s too complicated an issue. This is the kind of situation where transacting parties need to come to agreement about what makes sense in these particular contexts and let contracts do their thing. The courts won’t adjudicate this on a case-by-case basis in any effective way.

The risk of accelerationism is that you accelerate without proper safeguards. Noam Shazeer left Google to accelerate and founded Character.AI. What happened? Character.AI said problematic things to children, made sexual advances to children, and a kid killed himself. I don’t know if you could say Character.AI is responsible for that child’s suicide, but he was talking to the chatbot when he killed himself. That’s a tort case — Tristan Harris is funding it in the State of Florida, with a sympathetic jury and judge.

What’s Character.AI now? It’s a husk. Noam Shazeer’s gone, back at Google, and the company is likely to be picked apart in tort litigation, with other cases against them too.

If you accelerate without figuring this out, something very bad could happen. As they say, bad facts make bad law. Maybe it’s not unambiguously the AI model’s fault, but if there’s a really nasty set of facts, you could get adverse judgments in American courts. The common law is path-dependent, so you could end up with a very bad outcome quickly.

I’m enthusiastic about accelerating adoption and diffusion — Manus is very much a diffusion story. But if we don’t, in parallel, work on risk assignment (not catastrophic risk safety, but determining who is responsible when things go wrong), we could end up in a bad situation rapidly.

Legal Frameworks and Innovation Timelines

Shawn Wang: Do you think it’s primarily financial infrastructure that is needed, like your model of AI companies as insurance companies?

Dean Ball: Legal and financial, yes. What you basically need is a contracting mechanism that is perhaps AI-enabled — AI-negotiated contracts, perhaps AI-adjudicated contracts so you don’t have to deal with the expense of the normal court system. Once you have contracts and liabilities on the balance sheet, you’re in derivatives territory.

It’s a New York problem, not a San Francisco problem at that point. This is certainly an AGI-pilled idea. I wouldn’t do this with Claude 3.7, even though I think it’s great, but I think we could get there in the next couple of years when models are capable of doing things like this.

This is just one approach, certainly not the only one, and it’s a nascent idea for me. But this could be where the money actually is — pricing risk and transforming risk is something America is much better at than China. We’re fantastic at that.

It’s weird because many of my Republican friends in DC hate that fact. They view finance as decadent, as does Chairman Xi. But there might be trillions of dollars of wealth to be created here.

Shawn Wang: Any financial asset is based on the laws it’s grounded in, and I think the laws have to be figured out here. There’s a bit of a chicken-and-egg situation with that.

Dean Ball: Yes, but if you have contracts, contracts would form a substantial part of the law.

Shawn Wang: They still need to be litigated. One measure I’d be interested to plot is AGI timelines versus legislative timelines. We’re accelerating in AI progress and decelerating in law and Supreme Court resolutions of cases. Our legal infrastructure needs to keep up with AI progress, or we’re in serious trouble.

Dean Ball: I completely agree. That’s the problem I try to get my head around all the time.

Jordan Schneider: We saw the EU just try and completely fall on their face, which was not a good first effort for democracies.

Dean Ball: The problem is you don’t want to create a statute prematurely, one with a bunch of technical assumptions embedded in it. It’s a very narrowly targeted thing. For me, this is all clicking into place, and I think if we got this done in the next two years, we’d be fine.

Jordan Schneider: The future of agents in China is going to be really interesting. There was an argument two years ago that LLMs would have a hard time gaining traction in China because the government would worry about aligning them to avoid anti-party statements. But this is basically a solved problem.

I’m curious about your perspectives on the technical challenge — not just at a legal level of assigning blame, but at a product and operational level of building things that governments and large companies will be comfortable with. Is this just a matter of time? Is there anything fundamentally difficult requiring major breakthroughs? Once we have the technology to make Operator and Manus do really good things, will they be controllable as well?

Dean Ball: I’d be curious if you’d correct this assumption if I’m wrong, Jordan, but my impression of China is that it’s actually a somewhat more ice-cold libertarian country when it comes to liability issues, where there’s a greater “developing country” or “these things happen” mentality.

Jordan Schneider: Yes, until bad things happen, and then your company gets shut down.

Dean Ball: It’s more of a binary outcome.

AI and the Future of Work

Jordan Schneider: Let me take this in a different direction. JD Vance said at the AI Action Summit in Paris, “We refuse to view AI as a purely disruptive technology that will inevitably automate away our labor force. We believe and we’ll fight for policies that ensure AI is going to make our workers more productive. We want AI to be supplementing, not replacing work done by Americans.”

Shawn Wang: This is something AI engineers worry about a lot. A surprising number of them are actually worried for their own jobs, which is very interesting.

The main question is whether you have a growth mindset or a fixed mindset view of the world — whether you believe human desires tend to expand over time. Whenever we reach a certain bar, we immediately move that goalpost one football field away. The idea is that, yes, AI will take away jobs that exist today, but we will create the jobs of tomorrow, and ideally those are the jobs we want to do more of anyway.

Rohit Krishnan: I agree. To a large extent, that sentiment is the most normal politician statement in the world — technological growth is great and will continue making everyone’s lives better. It’s the same thing people have said for a very long time.

The difference here is that there’s at least a contingent of people who look at that and say, “No, this time it’s different.” You might say it’s not disruptive, but it could be massively disruptive in a short period of time to a large segment of society. It’s not just agriculture getting mechanized, but potentially all white-collar jobs.

When I’ve examined this issue, I don’t think massive disruption will happen immediately. The technological, regulatory, and sociological barriers are large enough that we won’t all be unemployed in five years. There are enough things to do. As Shawn mentioned, we’ll have to address the inevitable complications of regulatory frameworks before these technologies can be deployed everywhere.

By my rough calculations, we’ll still be bottlenecked by chips and energy in 10 years, which will prevent us from replacing all labor with AGI or AI agents. Does that mean there will be no disruption? Absolutely not. I fully expect disruption.

We already have AI that can plausibly replace large chunks of specific white-collar tasks that I do, you do, legislators do, or Supreme Court justices do. Pick your poison — we could probably replace a chunk of these roles with Claude 3.7 and get better results. We’re already in that world, but complete transformation won’t happen immediately.

JD Vance has to toe the party line: anti-AI safety, pro-acceleration, technological optimism all the way. I support that approach, but I’m not parsing his statement with any deeper meaning than that.

Dean Ball: I find myself more worried about slow diffusion due to multiple factors. The bottlenecks are regulatory, but there are many other bottlenecks as well. I’m much more concerned that diffusion and actual creative use will be slowed. I worry about the uses of LLMs that no one has ever thought of, and I’m concerned that no one will ever think of them. That’s probably the bigger issue we should be addressing through public policy.

In the longer term — and in AI time, that’s about three years — I do think there’s a possibility that some elite human capital might get automated in different ways. Political instability tends to emerge when you have an overproduction of elites in a society. We already have that problem. We’ve already significantly overproduced elites in America, and I worry that will get worse. When you combine that with other political problems America faces, you could have a tipping point phenomenon.

I wouldn’t dismiss it entirely as a risk, but my default assumption would be that the risk is actually on the other side — not diffusing fast enough.

Rohit Krishnan: I sometimes hold a thesis that markets that become extremely liquid end up with polarized outcomes. We’ve generally seen that with capital markets: globalization has meant some companies get extremely large while the middle gets decimated, which is why most gains come from the Magnificent Seven.

We could easily see something similar happen in labor markets. We already see flashes of it. Engineering salaries have a somewhat bimodal distribution. Lawyers experience it too. Once AI enters the picture, we might have a vastly more liquid labor market than ever expected. This sounds nice, except it results in a power law distribution. Polarization is difficult to address in domains we don’t know how to handle cleanly or where we can’t easily establish minimum thresholds.

Jordan Schneider: Any closing thoughts?

Shawn Wang: [In true professional podcaster fashion…] I don’t know if we’ve answered the question that you’re likely to put in the title of your episode: “Is this a second DeepSeek moment?” For what it’s worth, my answer is no.

Dean Ball: I agree. In some sense, I think it’s actually more interesting than DeepSeek. And in another sense, it’s certainly not as impressive of a technical achievement as DeepSeek.

Rohit Krishnan: I’ll argue for yes, because I think DeepSeek was a DeepSeek moment for core research talent. Manus is closer to DeepSeek for product. I’m glad we’re pushing a second boundary as opposed to pushing the same boundary.
