Ray Wang is a Washington-based analyst formerly based in Taipei and Seoul. He focuses on U.S.-China economic and technological statecraft, Chinese foreign policy, and the semiconductor and AI industry in China, South Korea, and Taiwan. You can read more of his writing on his Substack: SemiPractice or @raywang2.

Key Takeaways

Nvidia’s H20 GPU with HBM3 had become the most sought-after accelerator in China amid rapidly increasing inference and computing demand. Prior to the new restrictions, shipments were projected to reach 1.4 million units in 2025.

CXMT is now only 3-4 years behind global leaders in high-bandwidth memory (HBM) development, aiming to produce HBM3 in 2026 and HBM3E in 2027 amid notable technological improvements in DRAM.

CXMT still faces major roadblocks — these include U.S. export controls on lithography and other equipment, a volatile geopolitical environment, limited access to global markets, and the uncertain pace of technological development against market leaders.

Greater Demand for HBM and Nvidia’s H20

In December 2024, the U.S. released new export control packages targeting Chinese access to high-bandwidth memory, or HBM, and various types of semiconductor manufacturing equipment, including tools essential for HBM manufacturing and packaging. The new rule also added over 140 Chinese chip manufacturers and chip toolmakers to the Commerce Department’s Entity List.

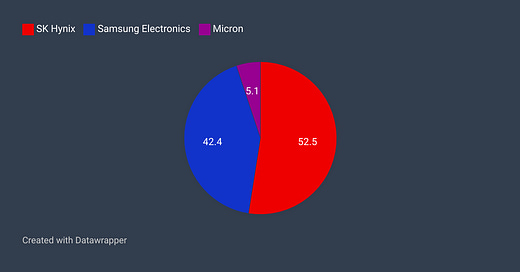

The new rule was designed to further constrain China’s AI development by leveraging the HBM chokepoint, since HBM supply is controlled by just three companies worldwide: SK Hynix, Samsung, and Micron. The restrictions on semiconductor manufacturing equipment (SME), meanwhile, aim to limit China’s ability to develop its own HBM.

HBM powers almost all of the AI accelerators that train large language models. It has become even more important with the rise of reasoning models and inference workloads, where memory bandwidth and capacity play a vital role.
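To make the bandwidth point concrete, here is a rough back-of-the-envelope sketch, not drawn from the author's analysis. It assumes a single decoding stream in which every generated token must read all model weights from HBM once, so the ceiling on tokens per second is simply HBM bandwidth divided by the bytes of weights. The model size, weight precision, and bandwidth values are illustrative assumptions; real serving systems batch requests and also read a KV cache, so they never hit this idealized bound.

```python
def max_decode_tokens_per_s(params_billion: float, bytes_per_param: float,
                            hbm_bandwidth_tb_s: float) -> float:
    """Idealized upper bound on single-stream decode speed when memory-bound.

    Assumes every generated token reads all model weights from HBM exactly once;
    ignores KV-cache reads, batching, on-chip caching, and compute limits.
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = hbm_bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical 70B-parameter model with 8-bit weights (1 byte per parameter).
for bandwidth in (2.0, 4.0):  # illustrative HBM bandwidths in TB/s
    bound = max_decode_tokens_per_s(70, 1.0, bandwidth)
    print(f"{bandwidth} TB/s -> at most ~{bound:.0f} tokens/s per stream")
```

Under these assumptions, doubling HBM bandwidth doubles the ceiling (roughly 29 to 57 tokens/s here), while adding compute alone does not raise it. Capacity matters separately, because the weights and KV cache must fit in memory to begin with.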

Chinese AI accelerators are no exception when it comes to reliance on HBM. For example, Huawei’s latest AI accelerators, the Ascend 910B and the upcoming 910C, are mainly equipped with four and eight HBM2E stacks, respectively. These were mostly sourced from Samsung before the December 2024 restriction went into effect, with some sourced afterward. Similarly, other Chinese GPU makers such as Biren, Enflame, and VastaiTech are likely using HBM2 and HBM2E from either SK Hynix or Samsung. Biren’s BR100, launched in 2022, incorporates four HBM2E stacks, and Enflame’s DTU, released in late 2021, uses two HBM2 stacks.

Amid the rapid development of advanced reasoning models in China, including DeepSeek R1, Alibaba’s QwQ-32B, Baidu’s Ernie X1, Tencent’s Hunyuan T1, and ByteDance’s Doubao 1.5, China’s demand for inference and overall compute has been accelerating. This trend began as early as late January and is in turn driving demand in the Chinese market for accessible GPUs with the most advanced HBM, namely the Nvidia H20.

The H20 was by far the most advanced and accessible foreign GPU available to the Chinese market. Huawei’s Ascend 910B, the domestic alternative, offers performance roughly on par with the Nvidia A100 but delivers only about 40% of the H20’s performance in a cluster configuration. Huawei’s latest GPU, the Ascend 910C, is competitive in both computational and memory performance; while its scale of adoption in China’s AI industry remains unclear, it is gaining more attention. Beyond raw metrics, Chinese firms continue to prefer Nvidia hardware for now, both because their engineers are deeply familiar with its mature and widely adopted software ecosystem and because of its superior reliability and efficiency in large-scale cluster environments, backed by reliable supply chain partners.

Although Nvidia’s flagship H100 delivers about 6.7 times the H20’s computational performance, the H20 provides greater memory bandwidth and capacity. For that reason, the H20 was preferred over the H100 for inference, a key factor driving its demand in China.
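The trade-off above can be checked with a few spec-sheet numbers. The sketch below uses approximate public figures that are assumptions rather than data from this article: about 989 TFLOPS of dense BF16 compute, 80 GB of HBM3, and 3.35 TB/s for the H100 SXM, versus about 148 TFLOPS, 96 GB, and 4.0 TB/s for the H20. The "bytes per FLOP" metric is a simple way to express how much memory bandwidth a chip has relative to its compute, which is what matters for memory-bound inference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    dense_bf16_tflops: float   # peak dense BF16/FP16 tensor throughput
    hbm_capacity_gb: float
    hbm_bandwidth_tb_s: float

    def bytes_per_flop(self) -> float:
        """HBM bytes available per peak FLOP (TB/s divided by TFLOP/s)."""
        return self.hbm_bandwidth_tb_s / self.dense_bf16_tflops


# Approximate public spec-sheet figures (assumptions, not from the article).
H100_SXM = Accelerator("H100 SXM", 989.0, 80.0, 3.35)
H20 = Accelerator("H20", 148.0, 96.0, 4.0)

compute_ratio = H100_SXM.dense_bf16_tflops / H20.dense_bf16_tflops
bandwidth_ratio = H20.hbm_bandwidth_tb_s / H100_SXM.hbm_bandwidth_tb_s
balance_ratio = H20.bytes_per_flop() / H100_SXM.bytes_per_flop()

print(f"H100/H20 compute: {compute_ratio:.1f}x")
print(f"H20/H100 HBM bandwidth: {bandwidth_ratio:.2f}x")
print(f"H20/H100 bytes per FLOP: {balance_ratio:.1f}x")
```

With these inputs the compute ratio comes out near the 6.7x cited above, while the H20 offers roughly eight times more memory bandwidth per unit of compute, which is why it holds up far better on memory-bound inference than on training.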

Multiple media outlets have recently reported rising demand for Nvidia’s H20, and several supply chain checks by the author confirmed this trend as early as the beginning of February. That marks a shift from my earlier supply chain check, based on mid-January data, which suggested a significant decline in H20 orders, likely due to concern over a potential H20 ban. We also cannot rule out that the rapid rise in H20 demand was itself driven by looming concerns over H20 restrictions, which date back to the late Biden administration.

According to my calculations in early March, based on downstream supply chain checks (advanced packaging and chip testing), Nvidia was on pace to ship roughly 1.4 million H20s in 2025. A separate calculation by the author suggests China could have obtained about 600,000 H20s by June. Notably, China has obtained more than 1 million units since the H20 began shipping in Q2 2024.

The rising demand for inference and computing power also aligns with the projected annual spending of China’s top cloud service providers. Companies like Tencent, Baidu, and Alibaba are expected to spend a combined $31.98 billion in 2025—a nearly 40% increase from 2024.
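As a quick sanity check on the spending figure, the implied 2024 base can be backed out from the 2025 total and its growth rate. This is a minimal worked example that treats "nearly 40%" as exactly 40%, so the true 2024 base would be slightly higher than the result.

```python
def implied_prior_year(current: float, growth_rate: float) -> float:
    """Back out last year's value from this year's value and its growth rate."""
    return current / (1.0 + growth_rate)


capex_2025_bn = 31.98  # combined 2025 figure cited above, in billions of USD
base_2024_bn = implied_prior_year(capex_2025_bn, 0.40)  # "nearly 40%" rounded to 40%
print(f"Implied 2024 base: about ${base_2024_bn:.1f}B")
```

Under that assumption, the three firms' combined 2024 spending would have been roughly $22.8 billion.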

Nonetheless, the H20 was officially banned effective last week. In a recent 8-K filing with the SEC, Nvidia disclosed that the U.S. government had informed it that a license is now required to sell the H20 to China, along with any chip matching the H20’s memory bandwidth, interconnect bandwidth, or both. Because such a license is unlikely to be granted, Nvidia will effectively be unable to sell the H20, or any more powerful GPU, to China.
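The license trigger described in the filing can be read as a simple threshold test. The sketch below is a simplified, hypothetical reading, not the regulatory text: it treats a chip as covered if it matches or exceeds the H20 on memory bandwidth or on interconnect bandwidth, and it does not model any rule for combining the two metrics. The H20 reference values (about 4.0 TB/s of HBM bandwidth and 900 GB/s of NVLink bandwidth) are assumed from Nvidia's public spec sheet, and the other chips are hypothetical.

```python
from dataclasses import dataclass

# Approximate H20 reference values (assumed from public spec sheets).
H20_MEMORY_BW_TB_S = 4.0
H20_INTERCONNECT_GB_S = 900.0


@dataclass(frozen=True)
class ChipSpec:
    name: str
    memory_bw_tb_s: float
    interconnect_gb_s: float


def needs_license(chip: ChipSpec) -> bool:
    """Simplified reading: covered if the chip matches or exceeds the H20 on
    memory bandwidth, interconnect bandwidth, or both. Any combined-metric
    clause and other criteria in the actual requirement are not modeled."""
    return (chip.memory_bw_tb_s >= H20_MEMORY_BW_TB_S
            or chip.interconnect_gb_s >= H20_INTERCONNECT_GB_S)


candidates = [
    ChipSpec("H20", 4.0, 900.0),
    ChipSpec("hypothetical low-bandwidth chip", 1.6, 400.0),
    ChipSpec("hypothetical high-memory-bandwidth chip", 4.8, 400.0),
]
for chip in candidates:
    print(f"{chip.name}: license required = {needs_license(chip)}")
```

The point of the sketch is that the threshold is anchored to the H20 itself, so any chip at or above it on either metric is treated as covered; whether a chip below both thresholds could still be caught depends on details not modeled here.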

Chinese Memory Advancements

The surge in demand for Nvidia’s H20, the critical role of HBM, and the growing emphasis on both reasoning models and inference all ultimately point to HBM’s strategic importance to China’s AI sector.

The Chinese government and industry are certainly well aware of HBM’s importance amid an increasingly unfavorable regulatory and geopolitical environment for computing resources and associated AI hardware. For China, developing indigenous HBM is a matter of strategic urgency and necessity, both to shake off existing U.S. restrictions and to strengthen its capacity for AI development.

Given HBM’s strategic role in China’s AI ambitions amid escalating U.S.-China tech competition, it is essential to map China’s HBM development as precisely as current data allows.

My best estimate is that China’s HBM development trails market leaders by only about four years despite tightening export controls, a narrower gap than previously estimated or widely expected, though challenges and uncertainties remain.

In the second half of 2024, reports indicated that CXMT, China’s leading DRAM and memory maker, had begun mass production of HBM2. That placed it roughly three generations behind the market leaders, which have been supplying HBM3E 8hi and 12hi (the most cutting-edge HBM on the market to date) to leading AI chip vendors such as Nvidia, AMD, Google, and AWS since 2024. My retrospective analysis in December of the HBM roadmaps of CXMT and the three market leaders suggested that CXMT lagged behind them by approximately six to eight years, with various challenges to overcome.

A six-to-eight-year lead — a rather reassuring gap for policymakers in Washington and industry leaders in this space — may no longer hold true given the fast-evolving industry developments.

The latest sources suggest Chinese memory makers have improved their HBM technology faster than previously projected. CXMT is reportedly working on HBM3 and plans to begin mass production next year, which would narrow its gap with the HBM leaders to about four years. The firm also plans to announce HBM3E and push for mass production in 2027, according to Seoul-based Hyundai Motor Securities. If true, the gap would be three years instead of four.
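The gap estimates above are simple differences between mass-production years. The sketch below reproduces them under stated assumptions: the leaders' first mass-production years (HBM2 in 2016, HBM2E in 2020, HBM3 in 2022, HBM3E in 2024) are approximate public milestones, not figures from this article, and CXMT's HBM3 and HBM3E years are the reported plans rather than achieved results.

```python
# Approximate first mass-production years for the market leaders
# (assumed from public announcements; not stated in the article).
LEADER_YEAR = {"HBM2": 2016, "HBM2E": 2020, "HBM3": 2022, "HBM3E": 2024}

# CXMT milestones as described above: HBM2 reported in 2024;
# HBM3 (2026) and HBM3E (2027) are plans, not achieved results.
CXMT_YEAR = {"HBM2": 2024, "HBM3": 2026, "HBM3E": 2027}


def generation_gaps(leader: dict[str, int], follower: dict[str, int]) -> dict[str, int]:
    """Years between the leaders' and the follower's mass production, per generation."""
    return {gen: follower[gen] - leader[gen] for gen in follower if gen in leader}


for gen, gap in generation_gaps(LEADER_YEAR, CXMT_YEAR).items():
    print(f"{gen}: {gap} years behind")
```

Measured generation by generation, the gap shrinks from about eight years at HBM2 to four at HBM3 and three at HBM3E, which is the narrowing the article describes, provided CXMT's plans hold.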

At SEMICON China 2025, held from March 26 to 28, over 1,400 domestic and international semiconductor firms gathered alongside senior Chinese government officials. Much of the media spotlight there focused on Chinese advances in lithography and other semiconductor manufacturing equipment (SME), including tools from firms such as SiCarrier.

What went largely uncovered, however, was Chinese progress in memory. Analysts attending the event expect rapid advances in HBM through 2025 and noted that HBM3E is the primary target specification for domestic HBM firms. This does not mean China is on the verge of successfully developing HBM3E, but the fact that domestic firms are actively developing around this specification implies meaningful progress in Chinese HBM technology. At a minimum, it signals that China’s memory industry is working on the most cutting-edge HBM, consistent with the Hyundai Motor Securities note cited earlier.

If CXMT manages to bring HBM3E to market by 2025 — or even 2026, which the author views as unlikely — it would mark a major milestone in China’s push for semiconductor self-sufficiency and send shockwaves through both the global memory industry and policy circles. This scenario, however, is not entirely out of reach. SemiAnalysis projected in January 2024 that “CXMT’s HBM3E for AI applications could begin shipping by mid-2025.”

Even if CXMT rolls out the less advanced HBM3 in late 2025 or 2026, that would surprise many, given its pace of progress thus far. It is important to remember that, unlike its legacy memory competitors, CXMT was founded only nine years ago, and its HBM2 entered mass production only last year. The firm has also been operating under a series of export controls for several years.

From a technical standpoint, CXMT appears increasingly capable of producing the DRAM die for both HBM2E and HBM3, building on its progress in DRAM. The company can currently manufacture DRAM at the D1y and D1z nodes (roughly 17–15 nm), the technologies used in these two generations of HBM. TechInsights, another leading technology consultancy, confirmed in its January analysis that CXMT can manufacture DDR5 at the D1z node (approximately 16 nm). The density of CXMT’s DDR5 is comparable to that of leading global competitors Micron, Samsung, and SK Hynix in 2021, though the chip has a larger die size and an unverified yield rate.

CXMT’s R&D team is likely developing sub-15 nm DRAM nodes, specifically D1α and D1β (14–13 nm), which are essential for HBM3E. CXMT will likely face major challenges developing D1α without extreme ultraviolet (EUV) lithography, but it is not impossible: in 2021, Micron debuted its D1α DRAM without using EUV, offering a potential path for CXMT to follow.
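The node reasoning in the last two paragraphs can be summarized as a small lookup: each HBM generation needs a DRAM die built on certain nodes, and CXMT's reach depends on which nodes it can manufacture. This is a minimal sketch encoding only the mapping described above; it is a necessary-but-not-sufficient check that ignores TSV, stacking, packaging, yield, and qualification, and the function name is purely illustrative.

```python
# Mapping of HBM generations to the DRAM nodes they use, as described above.
REQUIRED_NODES = {
    "HBM2E": {"D1y", "D1z"},
    "HBM3": {"D1y", "D1z"},
    "HBM3E": {"D1α", "D1β"},
}

# Nodes CXMT reportedly manufactures today.
CXMT_NODES = {"D1y", "D1z"}


def reachable_generations(capable: set[str]) -> list[str]:
    """Generations whose DRAM die can be built on at least one available node.

    Only checks the DRAM node; stacking, TSV, packaging, and yield are ignored.
    """
    return [gen for gen, nodes in REQUIRED_NODES.items() if nodes & capable]


print(reachable_generations(CXMT_NODES))
print(reachable_generations(CXMT_NODES | {"D1α"}))  # e.g., via a non-EUV D1α route
```

With today's nodes the lookup returns HBM2E and HBM3 only; HBM3E comes within reach only once a D1α or D1β process is added, which is why the EUV question matters so much.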

Taken together, the author believes the more realistic assessment is that CXMT is currently developing HBM3, with mass production expected to begin in the first half of 2026. For HBM3E, it is still too early to make a decisive call, given CXMT’s DRAM progress to date and the limited information available on this specification.

It is worth noting that if CXMT develops HBM3 or HBM3E, careful evaluation of its overall performance and compatibility with large language model training will be essential. Past experience shows that not all HBMs within the same generation are created equal. Samsung’s HBM3E 8hi and 12hi have struggled to pass Nvidia’s qualification test as a supplier for its high-end GPUs over the past two years.

One might ask why CXMT isn’t pursuing HBM2E, which appears to be the logical next step on its technology roadmap. The likely reason is market timing: most domestic GPUs are already equipped with HBM2E, meaning limited commercial opportunity would remain by the time CXMT’s version reaches mass production.

Given that Nvidia sold over one million H20 chips with HBM3 (or the reported “H20E” with HBM3E) to Chinese AI firms before the ban, Chinese GPU firms must continue to compete against Nvidia’s product in the short and medium term. As such, it makes strong business sense for CXMT to prioritize advanced memory technologies like HBM3 and HBM3E, supplying domestic GPUs with more competitive HBM.

Strategically, staying competitive in the fast-moving AI chip space requires CXMT to pursue a leapfrogging strategy — aligning its products with the memory demands of domestic GPUs and ASICs to secure both domestic relevance and potential global competitiveness. For example, Huawei’s Ascend 910C — and future iterations — will almost certainly seek to upgrade from the 910B’s HBM2E to HBM3 or HBM3E, improving its memory performance and overall competitiveness.

The Multi-Dimensional Challenges

To be sure, CXMT and other Chinese memory firms still face challenges on multiple fronts despite this progress.

First, restrictions on semiconductor manufacturing equipment will continue to hinder CXMT’s development. Although CXMT has stockpiled enough equipment for HBM and DRAM production, likely sufficient to sustain operations through 2026 or 2027, existing and any future export controls will still limit its ability to develop and scale advanced DRAM and HBM production in the coming years. For example, the December export controls restricted equipment critical to HBM manufacturing and packaging, including tools for through-silicon vias (TSVs), etching, and related steps. On top of that, maintenance personnel from U.S. semiconductor equipment firms embedded at CXMT have been instructed to leave the company amid the tightening restrictions, affecting its DRAM and HBM development.

Second, while the author outlines a potential path for CXMT to advance below the 15 nm node without EUV, adopting EUV will likely become unavoidable for developing cutting-edge DRAM and HBM, as it has for the other memory giants. Without EUV, CXMT could face challenges similar to those SMIC has encountered in recent years, struggling to improve yield, shrink die size, and scale production. This chokepoint could remain the key roadblock in CXMT’s pursuit of cutting-edge DRAM and HBM.

Third, CXMT’s access to the global HBM market will likely remain limited for at least the next few years, capping its role in the global AI hardware supply chain. With a multi-year technology gap, its HBM offerings are unlikely to be adopted outside China, where buyers have access to more advanced alternatives. Moreover, ongoing U.S.-China tensions and existing and potential restrictions will deter foreign firms from adopting CXMT’s HBM for their AI accelerators, even if its products become technically competitive, due to fears of geopolitical fallout.

That said, if CXMT achieves stable, scaled production of low- to mid-end HBM at highly competitive prices, it could at least put pressure on other HBM suppliers’ gross margins and secure some market share.

Fourth, the Entity List will limit listed firms’ commercial activities. Since 2020, the U.S. government has placed 768 Chinese entities on the Entity List, including major industry players such as Huawei and Chinese NAND leader YMTC. CXMT is notably still unlisted, but the author understands that adding it to the Entity List has been under constant discussion in Washington since January, posing a major risk for CXMT in the coming months and years.

Finally, it is uncertain whether CXMT can keep pace with industry leaders by consistently refreshing its product lineup generation after generation. Companies like SK Hynix are moving aggressively, launching HBM4 this year and planning HBM4E to meet the needs of Nvidia’s rapidly evolving product line. Can CXMT keep up amid its current technological gaps and challenges? I will leave that question open for debate.

Together, these hurdles could reshape the gap between CXMT and industry leaders in the years ahead. Still, if CXMT maintains its current momentum, its technological progress could further narrow the gap with leading players in the HBM market.

*The author would like to express sincere appreciation to those who provided valuable feedback on this piece, including Lennart Heim (RAND), Sravan Kundojjala (SemiAnalysis), Kyle Chan (Princeton University), and Sihao Huang (Oxford University).

*Acknowledged Limitation: This article does not delve into several critical processes in HBM manufacturing and packaging, such as through-silicon via (TSV), bonding, and related steps, which the author acknowledges should be included in the discussion of China’s HBM development. That said, the author believes these areas may present fewer technological hurdles for Chinese firms compared to the more complex challenges discussed in this analysis.

The author also acknowledges the inherent difficulty of projecting China’s HBM trajectory, given the dual constraints of limited public data and the rapid, often opaque nature of technological advancement within China’s semiconductor ecosystem. In recent private discussions with Korean analysts covering the memory sector, we shared concerns about this persistent information asymmetry and limited transparency, factors that inevitably affect the precision of any external assessment.

That said, this analysis is formulated based on both credible public sources and private insights, benchmarked against known technical progress and industry developments. While not exhaustive, it provides a meaningful perspective on China’s evolving position in advanced memory technologies and the broader implications for global semiconductor dynamics.
