Of all the wild moves we’ve gotten out of this Administration so far, cutting basic science funding could be the dumbest and hardest to reverse.

does a great job with the basic plot. I’d like to spotlight the newest NSF directorate, Technology, Innovation and Partnerships (TIP), created by the CHIPS & Science Act, which has been particularly hard-hit by DOGE. The idea was to supplement the world-class basic research that NSF does with more use-inspired and translational research with higher technology readiness levels. I’ve been following this directorate since its creation, recorded a panicked emergency pod when for a hot minute Senate Commerce almost killed it, and have been really impressed with its work so far.

TIP helped stand up NAIRR, has done a fantastic job helping catalyze regional innovation hubs, and is the only org I’ve seen in government actually be strategic about workforce development. My personal favorite is its new APTO program, which is creating the data and intellectual substrate necessary to really do smart S&T and industrial policy. For more of what TIP has been up to, check out their Director’s annual letter here. I’d also encourage DOGE to have a read of TIP’s roadmap for the next few years and try to spot stuff that America doesn’t need.

The NSF is not perfect. IFP has some excellent proposals on how to incorporate novel funding strategies like lotteries that need faster adoption. But IFP also recently wrote up how the NSF showed its mettle, and was able to move faster than the NIH for COVID-related grants. TIP in particular has collected some of NSF’s most forward-thinking talent and is experimenting with novel programs and funding strategies faster than anyone else in the NSF mothership.

American basic research is our golden goose and the envy of the world, building the basis for scientific innovations that make us richer, longer-lived, and more powerful. Our universities attract the best minds in the world, which is an enormous boon to the country, and absent radical intervention will continue to do so. While the NSF could use reform, we are criminally underfunding R&D already, and firing the most forward-thinking junior staff in the directorate singled out by national security heavyweights as critical to competing with China is an error this administration should correct.

Try Picking on Someone Your Own Size

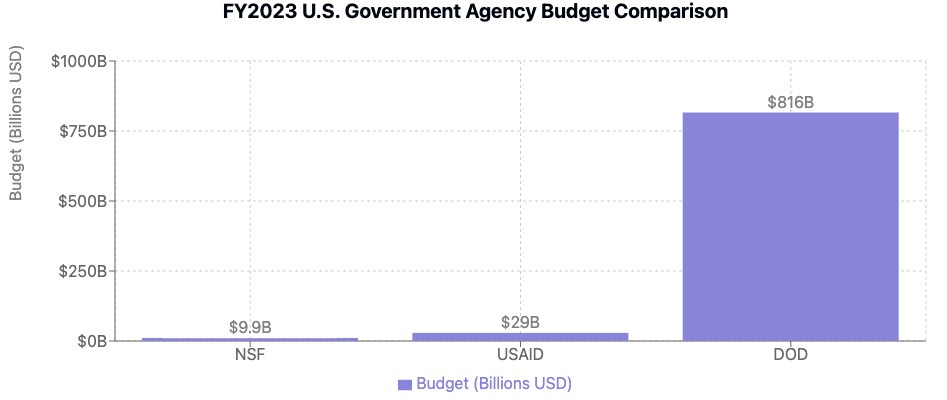

DOGE should really try taking on some government programs that aren’t already running lean, creating the future, preventing pandemics and saving lives. The real discretionary bloat isn’t malaria bednets and fundamental physics research but F-35s and carriers. A real push at a few deadweight DoD programs could deliver way more savings than whatever you can squeeze from NSF and USAID and likely make for a more effective force.

From Jennifer Pahlka:

The only way the DoD was really going to change was through major budget cuts — something that forced people’s hands into new ways of working, into true prioritization, into processes that took less time because they were less burdened by the trappings that come with enormous budgets. I began my comment with an apology to the senior Air Force official sitting next to me, a caveat that I meant no disrespect, and wasn’t arguing for less military might — in fact, what I wanted was a more capable military. To my surprise, he piled on. “She’s right,” he said. “But it has to be much deeper than anything we’ve seen before. We had to cut during the last sequestration, and it was around 15% off the top of everything, which doesn’t force meaningful choices. It needs to be like half.”

To get at wasteful DoD programs and acquisitions regulations, this administration would have to do the hard work of wooing Congresspeople into taking votes that would more substantially impact their districts. I hope that Trump 2.0’s staff has the stomach and topcover for this sort of work that could yield real long-term dividends for the country, not just grabbing the lowest hanging political fruit, which doesn’t really even have long-term fiscal relevance, like cutting probationary employees, foreign aid, and basic R&D.

From a ChinaTalk episode coming out on Monday with Mike Horowitz, former Biden DoD official, and The Economist’s Shashank Joshi:

Jordan Schneider: And I think this is like one of the many shames of the Trump imperial presidency. He has enough control of Congress to do this well and could even get some Dem votes for real defense reform!

Mike Horowitz: Let me muster a point of optimism here. If you look at Hegseth's testimony, his discussion of defense innovation is very coherent. He has takes that are not structurally dissimilar to the ones that we have been making.

There is a potential opportunity here for the Trump administration to push harder and faster on precise mass capabilities, on AI integration, and on acquisition reform in the defense sector. Because the president right now seems to have a strong hand with regard to Congress. Whether the president's willing to use political capital for those purposes is not clear. How the politics of that will play out is unclear. But if the Trump administration does all the things that it says it wants to do from a defense innovation perspective, that may not be a bad thing!

Shashank Joshi: My concern is also that you have people who are good at radicalizing and disrupting many businesses and sectors and fields of life. But the skills that are required to do that are different to the skills in a bureaucracy like this. Because, just because you were able to navigate the car sector and the rocket sector, doesn't mean you know how to cajole, persuade, and massage the ego of a know-nothing congressman who knows nothing about this and who simply cares that you build the attributable mass in his state, however stupid an idea that is, and who wants you to sign off on the 20 million dollars.

I worry that they will either break everything, and I'm afraid what I'm seeing DOGE do right now with a level of recklessness and abandon is worrying to me as an ally of the United States from a country that is an ally, but also that they will just not have the political nous [British for common sense] to navigate these things to make it happen. Just because Trump controls Congress and has sway over Congress doesn't mean that the pork barrel politics of this at the granular level fundamentally change. You need operatives, congressional political operatives. A tech bro may have many virtues and skills, but that isn't necessarily one of them.

Here’s to hoping! How about a Washington quote to send us off, from a 1775 letter sent to General Schuyler: “Animated with the Goodness of our Cause, and the best Wishes of your Countrymen, I am sure you will not let Difficulties not insuperable damp your ardour. Perseverance and Spirit have done Wonders in all ages.”

The Death of AI Safety: Moving Past the Pantomime

Tim Hwang is a writer and researcher. Relevant to the NSF topic above, he also hosted a great podcast series on metascience you should check out!

The AI Action Summit, which closed just over two weeks ago in Paris, will be remembered as a historically important gathering — though not how many of its organizers, attendees, and contributors anticipated. Rather than cementing AI safety as a priority for transnational collaboration, it turned into a memorial service for the safety era.

Billed as the successor to the high-profile gatherings of leaders that took place in the United Kingdom in 2023 and Korea in 2024, the Summit was originally intended to build on the frenetic activity that has taken place over the last few years to create international machinery for collaboration on AI safety issues. This has included an agreement on statements of principles, the formation of AI Safety Institutes around the world, and a blue ribbon “IPCC-style” report on safety issues.

This Summit’s lasting moments, however, came not from the success of “open, multi-stakeholder and inclusive approach[es]” on safety championed by the official declaration from the event, but instead from dramatic declarations of national primacy unshackled by safety concerns. Vice President JD Vance’s speech made little accommodation for either safety or internationalism, declaring that the United States was “the leader in AI and our administration plans to keep it that way,” and that he was not here to talk about AI safety but instead “AI opportunity.” Macron touted a massive €110 billion fund to back AI projects in France, and the United States and United Kingdom declined to sign the Summit’s declaration language. A wildcat “Paris Declaration on Artificial Intelligence” backed by private industry hit the Summit for failing to back a “strong, clear-eyed, and Western-led international order for AI.”

A sense of stuckness prevailed in the side conversations and events taking place throughout Paris. At the AI Security Forum, a slow carousel of speakers ran through very much the same tropes and ideas that had dominated the discourse for years. Shakeel Hashim captured a feeling widely held — that the Summit was a “pantomime of progress” rather than the genuine article.

The photo ops were taken, and the keynotes were done, but the old gestures and governance rituals — which had seemed so potent just a few years ago in Bletchley Park — are now odd anachronisms in the harsh light of 2025.

This isn’t just a vibe. The “AI safety community” has always nurtured a shared, but often unspoken, agreement that public-minded technical expertise and international cooperation were the most promising pathways to promote good global governance of the technology.

But the safety community made a historically bad bet. The wheels were already coming off multistakeholder, international governance in the world at large even as the safety community began to invest in it seriously in the mid-2010s. Resurgent nationalism, great-power competition, and the fecklessness of international institutions have limited options for global governance across many domains, and AI has been just another one of the casualties. This isn’t just about Trump winning: these changes in the international system are structural, and the domestic shifts in places like France and the UK would have led to a very similar result even if Harris had pulled it out last year.

The safety community was also profligate in the use of its attention and social capital. The political influence of fair-minded technical experts turned out to be a rapidly depleting resource, wasted away as one “high-profile letter from very concerned scientists” and “dramatic demo of hypothetical model threat” followed another to little effect.

Against such a backdrop, it’s no wonder that AI safety in 2025 feels ever more like pantomime. We’re still frantically pulling the same levers, even as the whole constellation of forces that move nations in general and technology policy in particular has rearranged itself.

We need to be asking hard questions. What are historical models for technological safety and stability in a world of fierce, unrestricted nationalism? What happens when scientific evaluation has lost its ability to persuade the policymaker? How do you slow down or stop a technological race-in-progress?

The real intellectual work is now rebuilding a theory for safety that takes these uncomfortable realities into account and builds as best it can around them.

“I hope that Trump 2.0’s staff has the stomach and topcover for this sort of work that could yield real long-term dividends for the country, not just grabbing the lowest hanging political fruit…”

Is there a shred of evidence that Trump 2.0 has the capacity or intelligence to do any such thing? I don’t see it anywhere. I just see naked partisanship and hackery, with a healthy dose of blundering incompetence and vile retribution.

NSF and specifically the regional innovation hubs seem like no brainers to keep.

- how do we escalate and make that happen?