Why Nvidia Keeps Winning: The Rise of an AI Giant

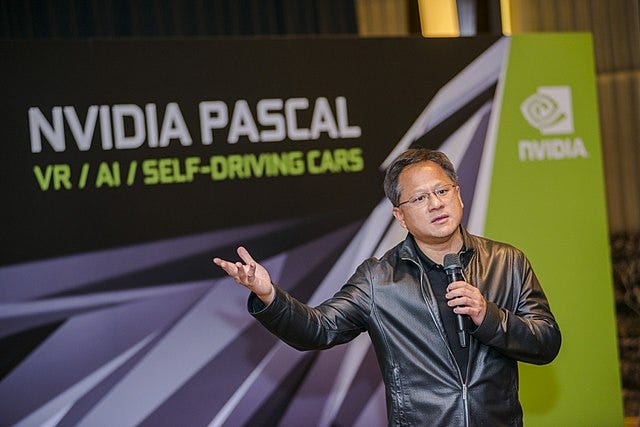

A brief history of the chip industry’s unbeatable “three-headed hydra” and its CEO, Jensen Huang

Doug O’Laughlin is the author of Fabricated Knowledge and has been writing about the interaction between semiconductors and the AI revolution for years. In this interview, we focus on Nvidia — how it rose to prominence, its importance to the large language model revolution, and the corporate and policy implications of its trillion-dollar valuation. Do note we recorded this episode before the latest reporting around possible enhanced chip controls and cloud compute restrictions.

In the conversation below, we cover:

Nvidia’s origin in the graphics card industry, and CEO Jensen Huang’s creation of a GPU ecosystem, which set up Nvidia to become a dominant player;

How the rise of transformer models in AI benefited from Nvidia’s compute and software ecosystem, allowing for larger, more scalable models;

The absence (for now) of foreign and domestic competitors for Nvidia, especially in China;

What US export controls on Chinese hardware mean for US-China AI competition; and

The limits and opportunities that accompany China’s potential access to foreign cloud services.

By the way, I’ll be in SF for Semicon next week! If you’re attending, respond to this email; it would be great to meet up.

Chip Off the New Block: Nvidia’s Origin

Jordan Schneider: Let’s start with Jensen Huang, who was born in Taiwan, moved to the US at the age of nine, and later decided he wanted to do computer graphics. Take us from there. Doug, what are the deep origins of Nvidia?

Doug O’Laughlin: Nvidia was truly a fly-by-night thing. They knew they wanted to do graphics cards, and there were a few other competitors. The company’s name had its origin in “NV,” or “next version,” the name they gave to all their files. At some point, they had to incorporate and said, “We’re going to do ‘Nvidia,’ after invidia, the Latin word for ‘envy.’”

They were always just focused on the next chip. The first chip they made was the NV1, in 1995. It was a low-end card for the graphics market, at a time when the industry was starting to add graphical user interfaces to computers. They partnered with what is now STMicroelectronics to launch it. It was okay. Then they developed a second chip, which was a little better. They skirted bankruptcy.

Then they finally launched a chip called RIVA 128. This chip was Nvidia’s defining moment. Since then, they’ve always been a juggernaut in the 3D graphics industry.

At this point, they’re duking it out with Silicon Graphics, 3dfx Interactive, and S3 Graphics. There are a lot of other companies, but they don’t matter, because Nvidia and ATI Technologies, which AMD later acquired, are the only two companies that make it through this intense period. There were tons of graphics card makers, and Nvidia was the winner of them all.

Around the period of 2000 to 2002, Nvidia becomes the stalwart. It has an amazing series of products and takes a lot of share, usually at the high end of the market.

That is the story of the beginning of Nvidia. It went from a tiny, fledgling, fabless chip company to making new products and eventually winning market share. It became dominant and has held that position in gaming ever since.

Jensen Huang’s GPU Long Game

Jordan Schneider: Nvidia is the king of computer gaming. But that wasn’t enough for the company’s leadership, it seems. How did they take the firm to the next level?

Doug O’Laughlin: Jensen has always been very vocal about accelerated computing. There’s an important shift here, so I want to explain the difference between parallel computing and the rest of computing. x86 is the CPU architecture you’re probably familiar with. A CPU fetches an instruction, does the job, and writes the result back, and it does that very quickly. GPUs, however, are specifically meant for rendering every single pixel on your screen. Working out each pixel’s color and location is a parallel problem.

Let’s take 1080p. That’s 1920 by 1080, or about two million pixels, and each pixel needs to know how to move and how to change. You can’t just do this with the CPU, because it would try to calculate each pixel one at a time. You need a machine that is extremely wide and parallel so that it can do all these little computations at the same time. That’s how you get a graphical user interface.

Those three things (pixels, shaders, and calculating triangles) are best done by matrix multiplication, which is also important for AI. The type of calculation GPUs were meant for, the highly parallel graphics processing of all the pixels, ends up being an almost perfect fit for the primary, heaviest part of AI computing.
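
To make the pixel-and-triangle point concrete, here is a minimal Python sketch, assuming a toy scene of four hypothetical vertices and a simple rotation about the z axis as the graphics transform. It is not how a real GPU pipeline is written; it only shows that moving every vertex is a single matrix multiplication, and that each output column depends on nothing but its own input column, which is why the work can be spread across many GPU cores at once.

```python
import math


def matmul(a, b):
    """Naive matrix multiply: a is n x k, b is k x m, result is n x m."""
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][t] * b[t][j] for t in range(k)) for j in range(m)]
            for i in range(n)]


def rotate_z(theta):
    """3x3 rotation about the z axis, a stand-in for a graphics transform."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


# Hypothetical scene: four vertices stored as the columns of a 3 x 4 matrix.
vertices = [[1.0, 0.0, -1.0, 0.0],   # x coordinates
            [0.0, 1.0, 0.0, -1.0],   # y coordinates
            [0.0, 0.0, 0.0, 0.0]]    # z coordinates

R = rotate_z(math.pi / 2)

# One matrix multiplication moves every vertex at once.
moved = matmul(R, vertices)

# The same result, one vertex at a time. Each column uses only its own
# inputs, so on a GPU all of these could be computed simultaneously.
one_by_one = [matmul(R, [[x], [y], [z]]) for x, y, z in zip(*vertices)]
```

The loop version and the single-multiplication version produce identical numbers; the difference is only in how much of the work can happen at the same time.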

Jordan Schneider: Is it just happenstance that the GPUs that render Tribes 2 are the same ones the deep learning revolution requires? Or is something more fundamental going on?

Doug O’Laughlin: I would say it’s a mix of both. The type of processor happened to be well suited to gaming, a market with a need Nvidia could fill in the near term while making money the entire way. But Jensen definitely, clearly had his eye on the ball.

He was talking in the 2010s about accelerated computing, about how all the workloads of the world needed to be sped up. He talked about it every year, and everyone was like, “Oh, that’s pretty cool.” But year after year, we never really saw it happen. The entire time, though, Jensen was giving away the ecosystem.

Remember, most code isn’t written to run on a graphics card. It has to be split into small pieces and then fed into the machine in parallel.

There’s something called CUDA, Nvidia’s software platform that lets developers write parallel code that runs on the GPU.
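
The “split it into small pieces” idea maps onto CUDA’s programming model, where a kernel is written from the point of view of a single thread and each thread locates its element with `blockIdx.x * blockDim.x + threadIdx.x`. The sketch below imitates that pattern in plain Python under stated assumptions: `saxpy_kernel` and `launch` are hypothetical stand-ins, not CUDA APIs, and the launcher simply loops where a GPU would run every thread concurrently.

```python
def saxpy_kernel(block_idx, thread_idx, block_dim, a, x, y, out):
    """Work done by ONE thread: compute exactly one element of a*x + y."""
    i = block_idx * block_dim + thread_idx   # global index, as in CUDA
    if i < len(x):                           # the last block may overhang the data
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Hypothetical stand-in for a kernel launch. A GPU would run all of
    these (block, thread) pairs concurrently; here we simply loop."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n

block_dim = 4                                  # threads per block
grid_dim = (n + block_dim - 1) // block_dim    # enough blocks to cover n elements
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
```

The point of the pattern is that no thread depends on another thread’s result, so the hardware is free to run them in any order, or all at once.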

Jensen thought about this: he knew that if he gave away the software for free, it would create an ecosystem.

He started giving it away as widely as he could to researchers, maybe as early as 2010. He would give away GPUs and CUDA and make sure the researchers were working on and using GPUs. That way, the only way they knew how to do their problems was on GPUs, and they optimized their physics libraries for GPUs.

Jensen had his eye on the ball and knew he was creating an ecosystem and making his product the one to use. He gives it away for free so everyone knows how to use it. Then everyone uses it in their workflows and optimizes around it.

He does this for about a decade. The whole time, Jensen looks at these problems and knows these massive matrix multiplication problems are the future of big data.

I don’t think that would have been a spicy opinion in the 2010s — that matrix multiplication would be used for very large data sets and hard, complicated problems; that’s not a big leap. But pursuing that path, seeing that vision, and creating the ecosystem around it — giving away a lot of it for free — is how Jensen locked in that ecosystem ten years ago.

Transformers Revolution: More Than Meets the Eye?

Jordan Schneider: How does the revolution in transformers connect to the compute and software ecosystem Nvidia built?

Doug O’Laughlin: Transformers are a specific type of AI model. A transformer takes a lot of data, say, a phrase or sentence fed to a large language model, and puts all the information each word carries into a transformer cell.

At small sizes, transformers don’t perform as well as other kinds of neural networks. (Recurrent neural networks and convolutional neural networks are two examples, and there are other types of neural networks.)

But transformers do one thing really well: they scale up seemingly without limit. We realized that with the transformer model, we could make these models much, much bigger, and as we scaled them, they kept improving.

Transformer cells are the small, individual blocks of compute that make these models possible. Training the model is a guess-and-check process. The computer guesses the outcome, such as the next word, and checks the guess against the actual data. It does this over and over again until it has guessed and checked everything it can within a data set. By doing this, the model learns all the relationships in the data.

Now the model can take these relationships, and whenever you feed it new information, it uses what it has learned from everything it was trained on to give you a result. That’s the transformer LLM breakdown: guessing and checking, with lots and lots of compute.
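
The guess-and-check loop described above is, in practice, gradient descent. As a hedged illustration only, the sketch below trains a two-parameter linear model rather than a transformer, on hypothetical data generated from y = 3x + 1; the learning rate and epoch count are arbitrary choices for this toy problem. LLM training follows the same guess, check, adjust cycle, just with billions of parameters and vastly more data, which is where the compute goes.

```python
def train(data, lr=0.01, epochs=2000):
    """Fit y = w*x + b by repeated guess, check, adjust (gradient descent)."""
    w, b = 0.0, 0.0                      # initial guess for the parameters
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            guess = w * x + b            # 1. guess
            err = guess - y              # 2. check against the actual value
            grad_w += 2 * err * x        # gradient of the squared error w.r.t. w
            grad_b += 2 * err            # gradient of the squared error w.r.t. b
        w -= lr * grad_w / n             # 3. nudge the parameters to shrink the error
        b -= lr * grad_b / n
    return w, b


# Hypothetical training data generated from y = 3x + 1.
data = [(x, 3.0 * x + 1.0) for x in range(-5, 6)]
w, b = train(data)

# Use what was learned on an input that was not in the training data.
prediction = w * 10 + b
```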

Gold in the AI Hills: How Nvidia Got There First

Jordan Schneider: Nvidia has two lines of revenue: gaming and data centers. How has the past quarter answered some questions about Nvidia’s long-term importance?

Doug O’Laughlin: ChatGPT has become a viral and meaningful product. It has millions of users, though its market penetration is still a small percentage of the total.

This AI thing has been happening for a while now. GPT-3 was good. And as you know, if you’ve used GPT-3.5, it’s a very good model. But we finally hit the tipping point of it becoming a true consumer product. Once people started to consume it, they started to realize its potential. To be clear, it’s still very immature. But one of the things that’s important about this whole story is that we have a roadmap for AI to get better, which is rare.

Transformer models improve as they get larger. Researchers have been making them larger, and they’ve been improving. We’re now at the point where we need new GPUs so we can make larger models that we know are going to be better. That’s how we’re going to improve.

This is the quarter for it because the gold rush is on. The VCs are spending all the money. People are realizing the potential of ChatGPT and the right way to improve these models. There’s a lot of engineering, and there’s a lot of work being done at the data-science level.

The biggest problem — the big-ticket item — for anyone to enter the game is having a lot of GPUs. For example, there’s a private, VC-backed company called Anthropic, and they just raised billions of dollars. They’re going to spend billions of dollars on GPUs.

Let’s compare AI workloads to traditional workloads. In a traditional workload like serving a website, you load the page, your browser pings a web server, and the server sends back the response. These are really easy problems in terms of compute.

But if you look at AI, it’s totally different. Let’s say you ask an AI a question. It runs your thirty-word sentence through its model, which has billions of parameters encoding the relationships between all these words. Each ChatGPT query costs cents. That doesn’t sound like a lot, but in terms of compute, a Google search costs so little that it doesn’t even round to a cent. The big difference is that AI is far more compute-intensive. And not only is it compute-intensive, but we know it gets better with even more compute.
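
For a rough sense of scale, a commonly cited rule of thumb is that a transformer’s forward pass costs about 2 floating-point operations per parameter per token. The sketch below applies it, assuming GPT-3’s published size of 175 billion parameters and treating the thirty-word question as roughly thirty tokens (real tokenizers usually produce somewhat more tokens than words). It counts only reading the question, not generating the answer, so it understates the real cost of a query.

```python
def forward_flops(n_params, n_tokens):
    """Rule-of-thumb forward-pass cost: about 2 FLOPs per parameter per token."""
    return 2 * n_params * n_tokens


params = 175_000_000_000   # GPT-3's published parameter count
tokens = 30                # assumption: roughly one token per word of the question
flops = forward_flops(params, tokens)
print(f"{flops:,} floating-point operations")   # 10,500,000,000,000 floating-point operations
```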

Everyone has woken up and said, “We need more GPUs now. It’s a war. For us to win the war, the only way is to buy more picks and shovels. The pick and shovel provider is Nvidia.”

Nvidia’s Customers

Jordan Schneider: Who else needs the type of compute Nvidia is selling?

Doug O’Laughlin: The big ones are Microsoft and OpenAI. There are also a lot of enterprises starting to put real dollars behind this. Salesforce says it will add AI to all of its workloads. ServiceNow is investing in AI as well. Enterprises are starting to meaningfully move forward.

Some want to just give the keys to the kingdom to OpenAI because it’s truly the best product right now. But a lot of companies, especially tech companies, are going to want to do it on their own, adding some kind of proprietary difference.

Bloomberg made BloombergGPT, which is trained on financial data. It’s much smaller than OpenAI’s ChatGPT, but it’s better at finance because of that specialization. Everyone wants to bring their own data sets and make their own models built on their proprietary data set, which they believe will outperform publicly available competitors.

This is the gold rush area: everyone is trying to figure out how to best make a model, how to best scale it, and how to make it their own.

Nvidia even sells a managed service to train customer models. Meta is a good example. Right now most of the core investing is coming from big companies at the top trying to create solutions that can be scaled and sold to other companies and individuals. No one knows where the limits of improvement are.

Right now OpenAI is clearly in the lead. But it’s so dynamic that their lead is not guaranteed. So everyone has to invest in it.

Sam Altman said something insane. To make OpenAI viable, they need at least $100 billion. That’s crazy, right? But we know that the models get better if we make them bigger. So the only way we can make them bigger is if we buy a lot more GPUs.

Nvidia’s Competitors (It’s Just Google)

Jordan Schneider: Nvidia can charge enormous margins because they’re largely the only game in town. But there are lots of others who have tried to design their own chips. Why are these options not as good as Nvidia’s?

Doug O’Laughlin: There really is only one real competitor, and that’s Google. I just wrote a piece about this. I talk about the three-headed hydra of Nvidia’s competition.

Nvidia has three important parts of the stack extremely locked up. One is the hardware. Another is the software with CUDA and all the optimization around it. The third is networking and systems.

The order from smallest to largest is software, hardware, and networking. Software is really important. Cerebras, for example, is a hardware competitor.

When these AI accelerator companies started to make hardware, one of the problems every single one of them ran into was that they didn’t have the software to support it. They had to build it. They had to build a software stack from scratch every single time they wanted to take on a new problem.

Those companies assumed AI was going to be relatively fixed, that people would know what the right answer was. But the world was much more dynamic, and things changed. GPUs held up better because they are flexible enough to be used for any matrix multiplication problem. A lot of companies made the bet on convolutional neural networks, but then the transformer model came along and completely blew them away.

A lot of the AI hardware startup companies had a good hardware solution, but they didn’t have a good software solution.

Networking is another level. These models are becoming so large that even if you go and buy a $40,000 GPU, it’s not going to be enough to train your model. Training can take months, even spread across tens of thousands of GPUs.

One issue is the interconnect problem. It’s not just how good the software and hardware are; it’s how well the hardware works together in a big system.

The analogy I use is that the pizza is too big to bake in any single oven. For one cohesive model, or pizza, to be finished, you have to cut it into tiny slices, and each oven cooks just its own slice. Then you put all the slices together into one giant, cohesive model.
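
The pizza analogy corresponds to what practitioners call model (or tensor) parallelism. Here is a minimal sketch, assuming a single layer that multiplies a weight matrix by an input vector and hypothetical “devices” that are just Python lists: the weight matrix is cut into row slices, each device computes its slice of the output independently, and the pieces are stitched back together. The stitching step is where, on real hardware, data has to move between GPUs over an interconnect.

```python
def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def split_rows(w, n_devices):
    """Cut w into at most n_devices contiguous slices of rows."""
    size = -(-len(w) // n_devices)             # ceiling division
    return [w[i:i + size] for i in range(0, len(w), size)]


def sharded_matvec(w, x, n_devices):
    """Each hypothetical device holds one slice of the weights and computes
    its share of the output; the shares are then concatenated."""
    slices = split_rows(w, n_devices)
    partials = [matvec(s, x) for s in slices]  # independent, one per device
    # Gathering the partial results is the step that needs a fast interconnect.
    return [v for part in partials for v in part]


weights = [[1, 0, 2], [0, 1, 0], [3, 1, 1],
           [2, 2, 2], [0, 0, 1], [1, 1, 1]]
x = [1, 2, 3]
result = sharded_matvec(weights, x, 3)
```

Splitting by output rows keeps each slice’s work fully independent; other split strategies exist, and they trade less memory per device for more communication between devices.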

Nvidia’s advantage there is NVLink, its high-speed GPU-to-GPU interconnect, plus a lot of software optimization to scale these models beyond a single GPU. Nvidia has done a really good job at that. The AI hardware companies haven’t offered a good solution.

Now there is one other competitor in town, and that company is Google.

Google has been at the forefront of AI research for a long time. They have a lot of the things Nvidia has, but they are custom, in-house, and proprietary. They don’t sell it as a solution.

TPUs are their hardware, and XLA is their software. They have their own optical circuit switch (OCS) networking, with their models on top of it.

Google right now is probably the closest real competitor that has a complete vertical full stack. Nvidia doesn’t have a full vertical stack because they’re not customer-facing and they don’t make the models. They make some open-source models. They improve the entire ecosystem. But they’re essentially AI as a service, and they’re trying to sell it to people who are making the models.

Google is trying to own the entire stack — consumer to model to hardware to networking — and sell it. So far, Google has truly the most competitive, differentiated offering relative to Nvidia. But no one else has made the solutions that Nvidia does.

There’s a big difference between making a theoretical chip that can run a model but that you then have to troubleshoot, and Nvidia, where you can buy 10,000 GPUs and they will work out of the box.

Productization is Nvidia’s big differentiator: it has done a really good job of making a product to sell to customers. The three-headed hydra of Nvidia is hard to compete with.

We saw the AI hardware companies try to make better hardware, but they couldn’t beat Nvidia. They tied it on hardware, but they couldn’t beat it on software. And we’re not even talking about networking yet.

You have to beat Nvidia on all three vectors of competition at the same time. If you cut off one head, that’s not going to be enough. You have to cut off all three at the same time, and each of the heads is at the top of the game, leading and getting better.

This is the hard problem.

You have to compete with them on three different fronts, and most companies are lucky to be able to compete with them on one.

Geopolitical GPUs: Nvidia and China

Jordan Schneider: In October 2022, the US Department of Commerce enacted export controls designed to stop PRC-based data centers from acquiring the kind of full-stack compute Nvidia provides. What are the implications of there being no Chinese firms that can compete with foreign compute offerings?

Doug O’Laughlin: Maybe the only company I’ve heard of is Biren, the GPU company. But that product is super behind.

One of the benefits is that the US seems to be ahead in the innovation game. Also, China has a GPT censorship problem.

We can run unfettered as fast as possible, but China just doesn’t have the engineering chops. The October export controls stopped China’s ability to even do that. They can’t make the chips. Everyone is relying on TSMC, and we’ve done as much as we can to cut off China.

On top of that, they can’t really design the chips. Some aspects of EDA (electronic design automation) have been turned off for them. And we limited the networking aspect of it. Nvidia can sell chips to China, and they do. They probably sell millions, even billions, of dollars’ worth of H800s, essentially a limited version of the H100 with much worse networking. Otherwise the specs are kind of similar. It’s probably like a slower bin or something like that.

The Bureau of Industry and Security was thoughtful and said the US can give them as much hardware or as many chips as they want, but China can’t scale up to make these ginormous models if it doesn’t have the networking. That’s how the United States has hampered China’s ability to even be in the race.

I’m sure there are domestic companies that are trying to get around this problem, because the chips are the same. I’m sure there’s some way to improve the networking and scale it out in an even bigger, better way. It’s just not going to be what is available off the shelf today.

It’s hard for me to imagine China competing on these large language models because they have their hands tied behind their backs. They can’t scale up to this huge amount. Maybe they could scale up with even more H800s, but it would cost some ridiculous number that is truly mind-boggling. That’s just in the race to buy Nvidia GPUs.

There’s no real domestic competitor that has any kind of shot. I mean, US competitors right now seem to be years behind Nvidia. The Chinese ecosystem is pretty handicapped.

If You Can’t Beat ‘Em, Join Their Cloud?

Jordan Schneider: Chinese firms, however, are not restricted in accessing cloud services overseas. Nothing is stopping a Chinese company from buying top-of-the-line Nvidia compute from AWS. Does that alter the dynamic?

Doug O’Laughlin: For these companies, it matters quite a bit. It’s kind of relegated China to being a consumer, a purchaser. They can only buy the IP, but they can never own it.

The PRC cut the West off from the Chinese internet, so we’re going to cut them off from all the AI research and hardware, and now they’ll have to buy it as a service from us. There’s a tit-for-tat going on, and it’s hard to imagine anyone truly competing.

Jordan Schneider: So Chinese firms can’t compete on hardware, but they could train their models on cloud services and then compete with Western firms? They might spend pennies on compute hoping to get dollars of revenue back.

Doug O’Laughlin: Maybe they can spend pennies to get dollars. But realistically, if I had to guess, they’re going to be spending pennies to make $0.50. It’s going to come in at a lot lower margins than it used to. That’s a big deal.

But if China and the US are in an ideological trade war, why bother at all? It seems that we’re closer to that, so why bother? We have cut off the future of humanity.

The roadmap of technological progress doesn’t seem to be available to China at scale. There was a race between China and the US on AI, but we hampered their hardware and cut off their ability to scale.

Jordan Schneider: This undermines Jensen’s claim that if we don’t let Chinese firms buy semiconductors, we’re going to fall behind. Chinese firms might still access compute, but instead of housing it in Guizhou, they’re just getting it from server farms in Seattle or Singapore.

Is the US comfortable with Chinese firms having relatively unfettered access to these hardware stacks, but just outside of the territory of the PRC?

Doug O’Laughlin: The important part of this whole thing is latency. They can use ChatGPT, but the raw latency is going to be a lot slower than in the United States. We get to use it at a speed of about sixty milliseconds per response. For them, it might be one to two seconds. Some consumers will be able to eat that.

In terms of technological warfare, China will always be cut off from the super high-end stuff because it has to be done. But I don't feel like the US would let them rent models from US companies.

Jordan Schneider: Hopefully AWS knows what’s going on in their servers enough to stop a Chinese drone swarm from invading Taiwan while relying on cloud compute in Singapore.

Doug O’Laughlin: I would think they’re aware enough. But on top of that, the closer compute cluster is going to win because one’s going to be faster than the other. If you have to lease it, it’s a physical problem. If they’re renting AI models in Virginia or North Carolina, wherever the giant server farm is, that’s going to be a 600-millisecond latency versus running it locally in Taiwan. The faster one will win.

Get Your Compute Out of the Clouds

Jordan Schneider: The dream is to have advanced computing directly on your PC or iPhone rather than having to access it via the cloud. Is this sort of thing a threat to Nvidia’s future?

Our conversation continues with a discussion of:

The impact of on-device inference on Nvidia’s future

Lessons for founders and policymakers from Jensen Huang’s arc

How Nvidia’s future is intertwined with TSMC’s